No Halloween this year

I used to love Halloween growing up, not so much the dressing up part but the knocking on doors and getting handed fistfuls of candy. Now, as an adult, I love returning the favor and always think about giving out larger-than-average candy and chocolate.

But not this year, thanks to COVID-19.

Hopefully 2020 will be the one and only year that we skip Halloween …

Started writing my first e-book

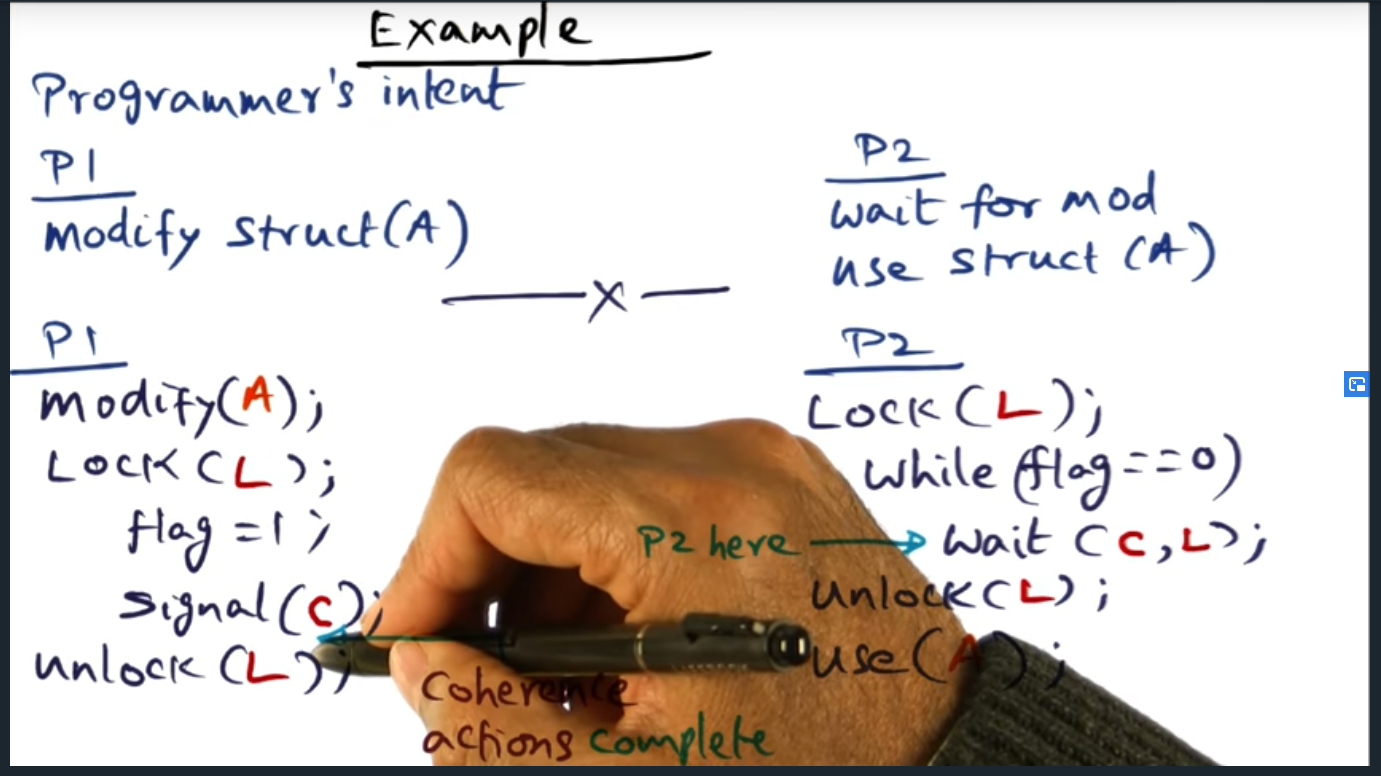

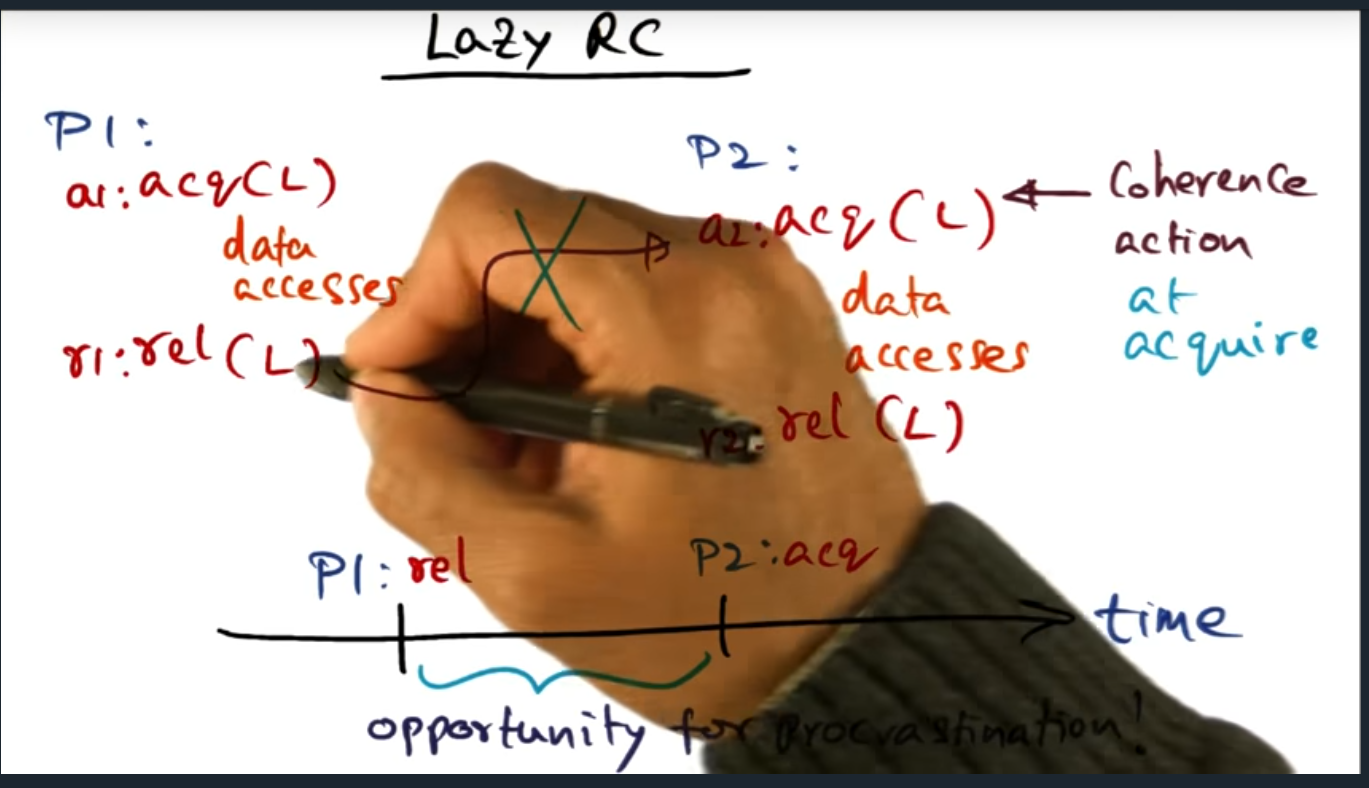

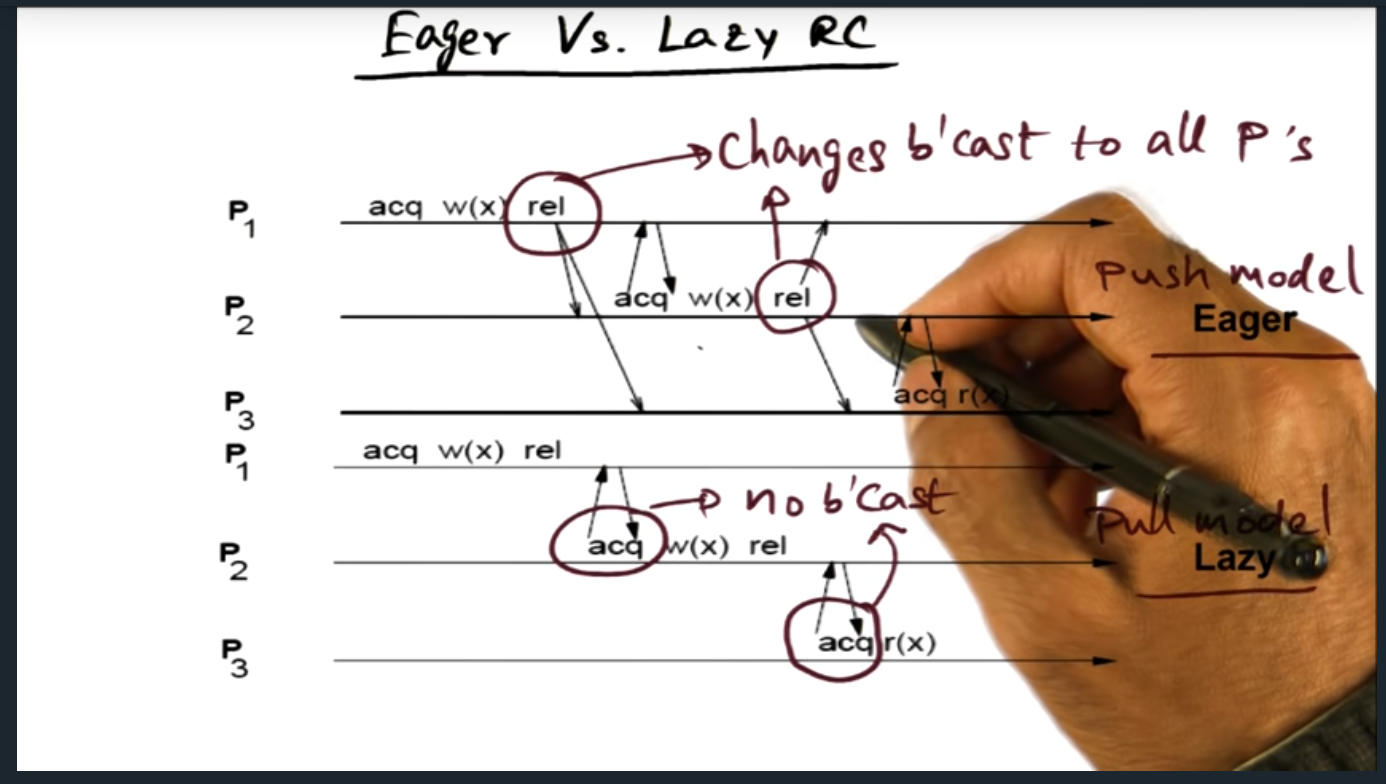

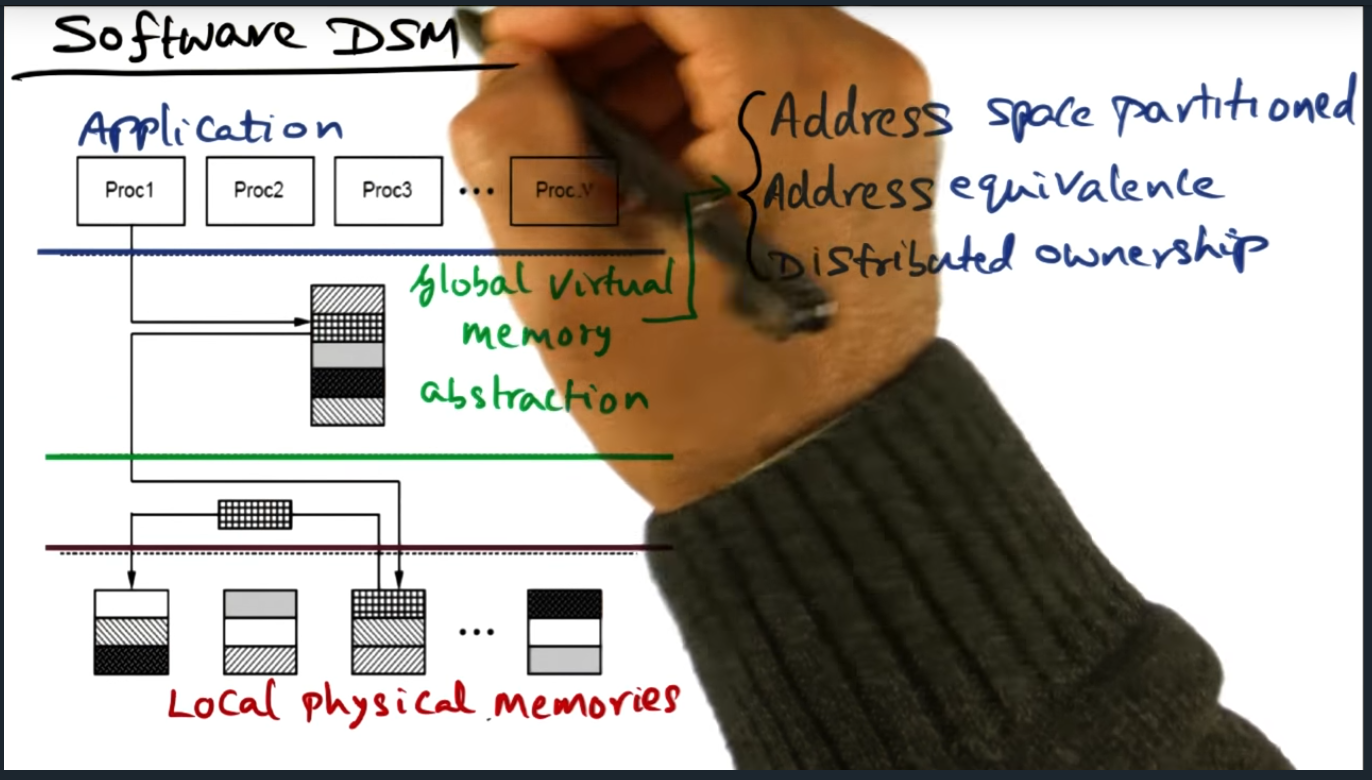

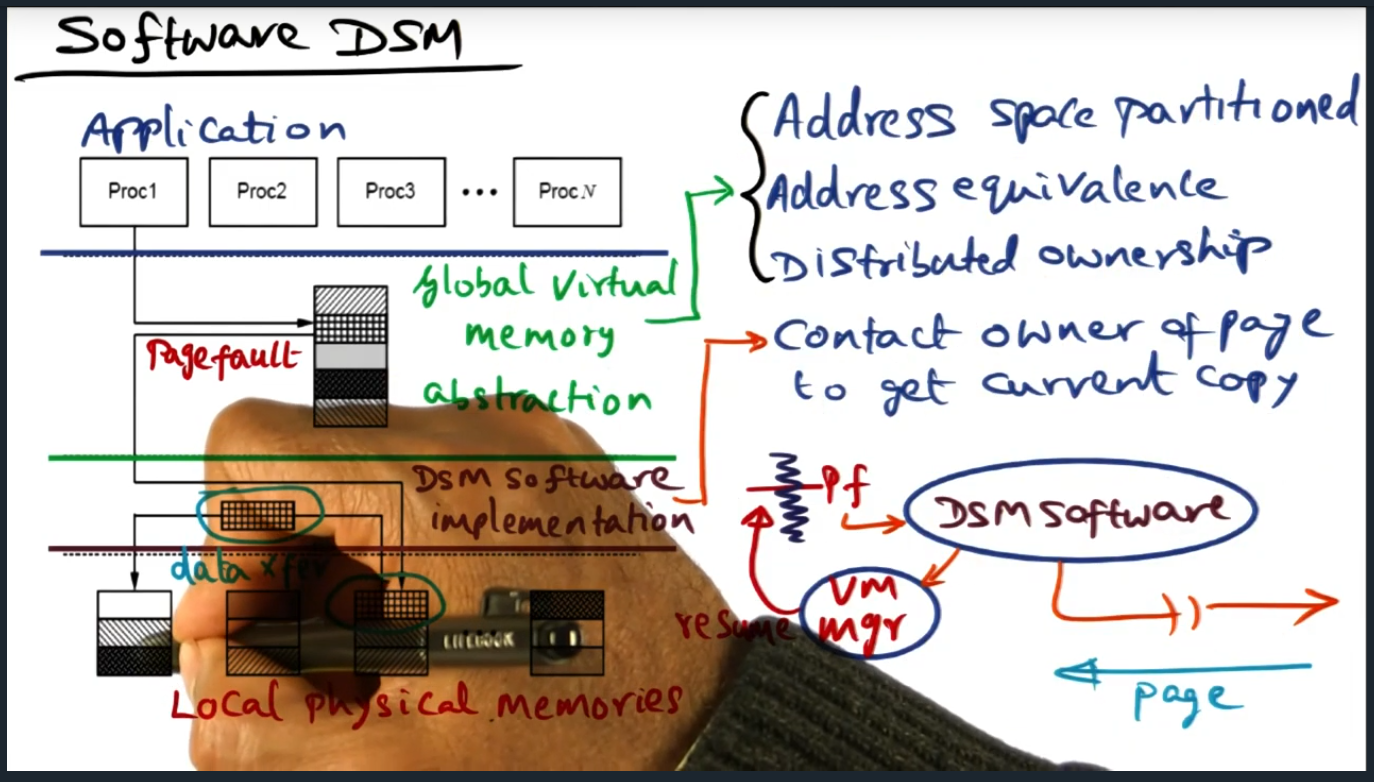

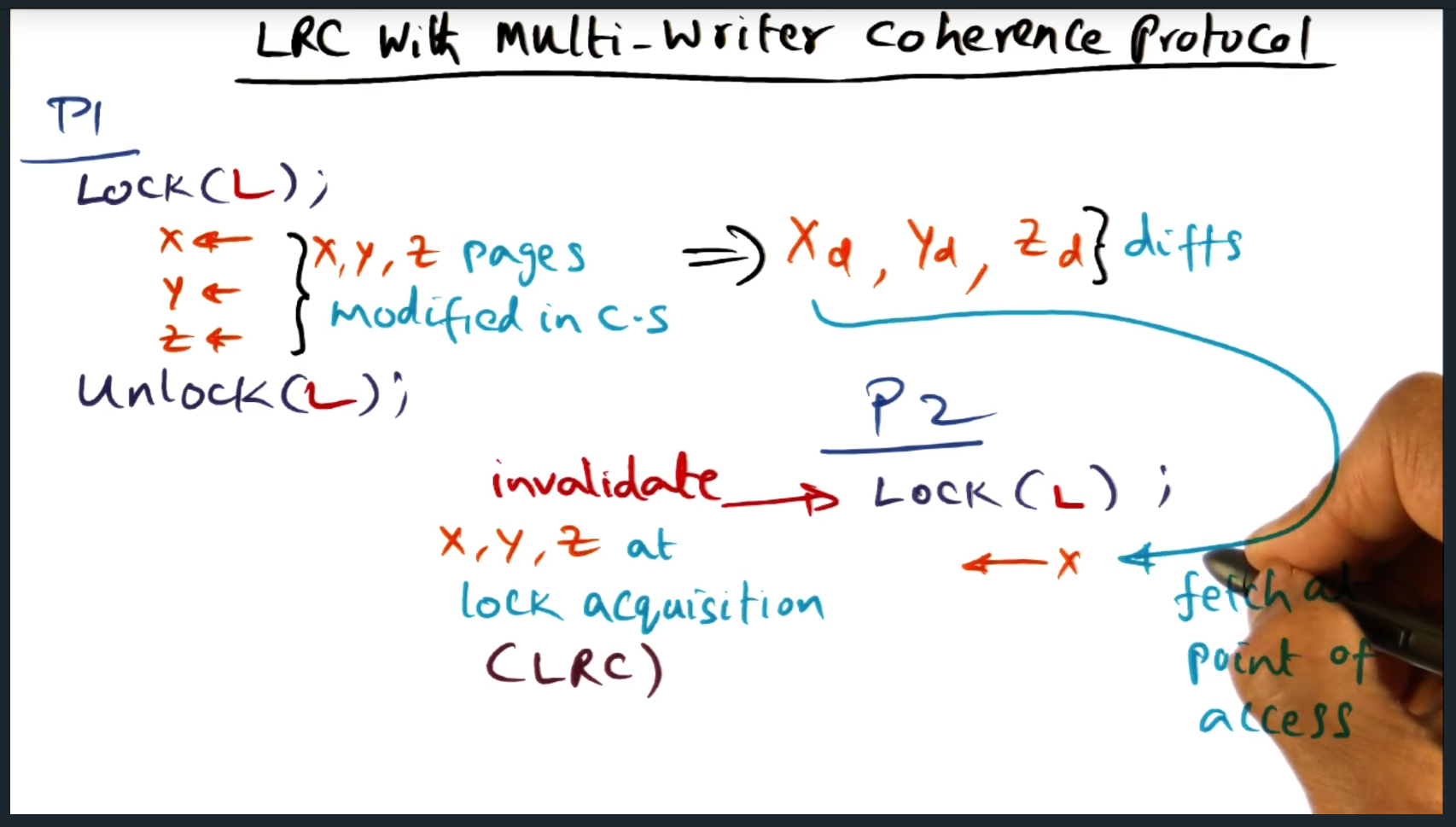

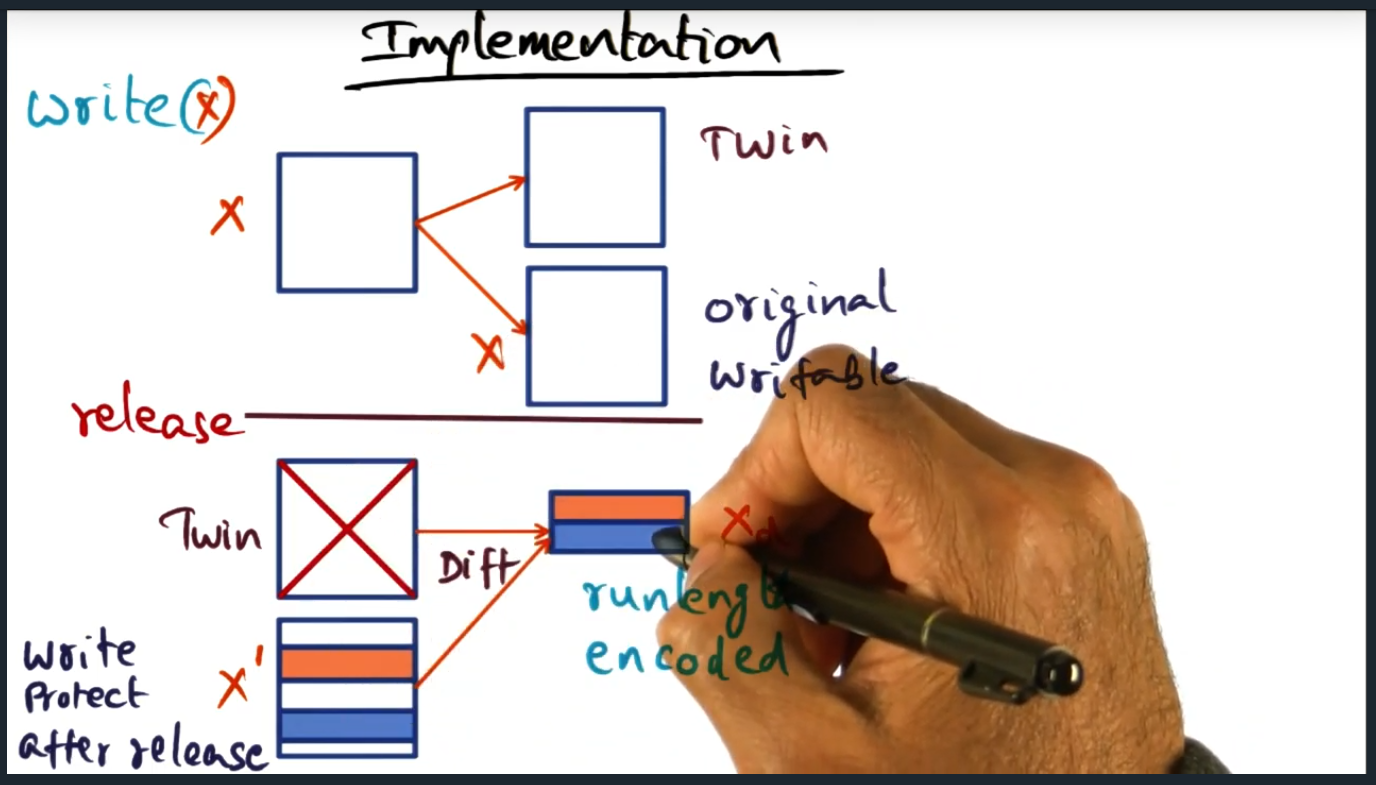

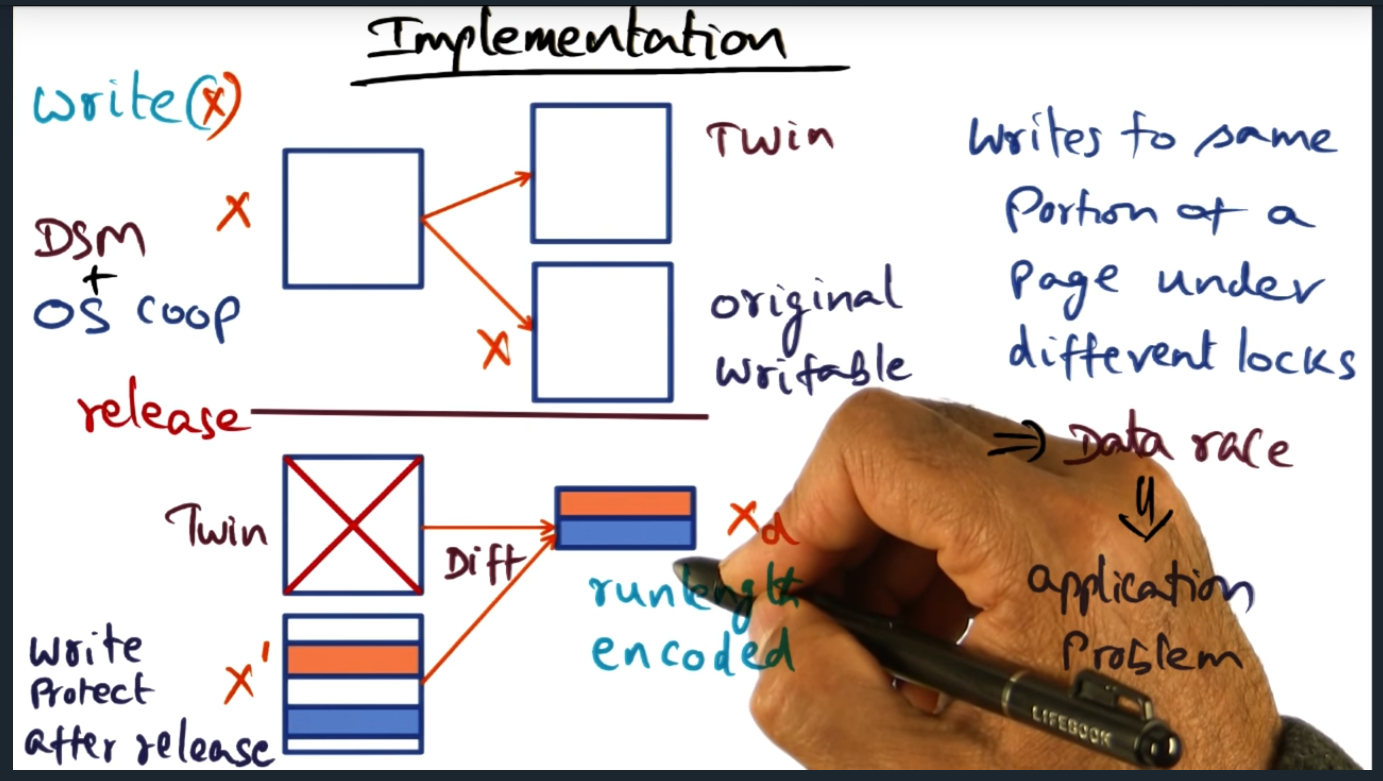

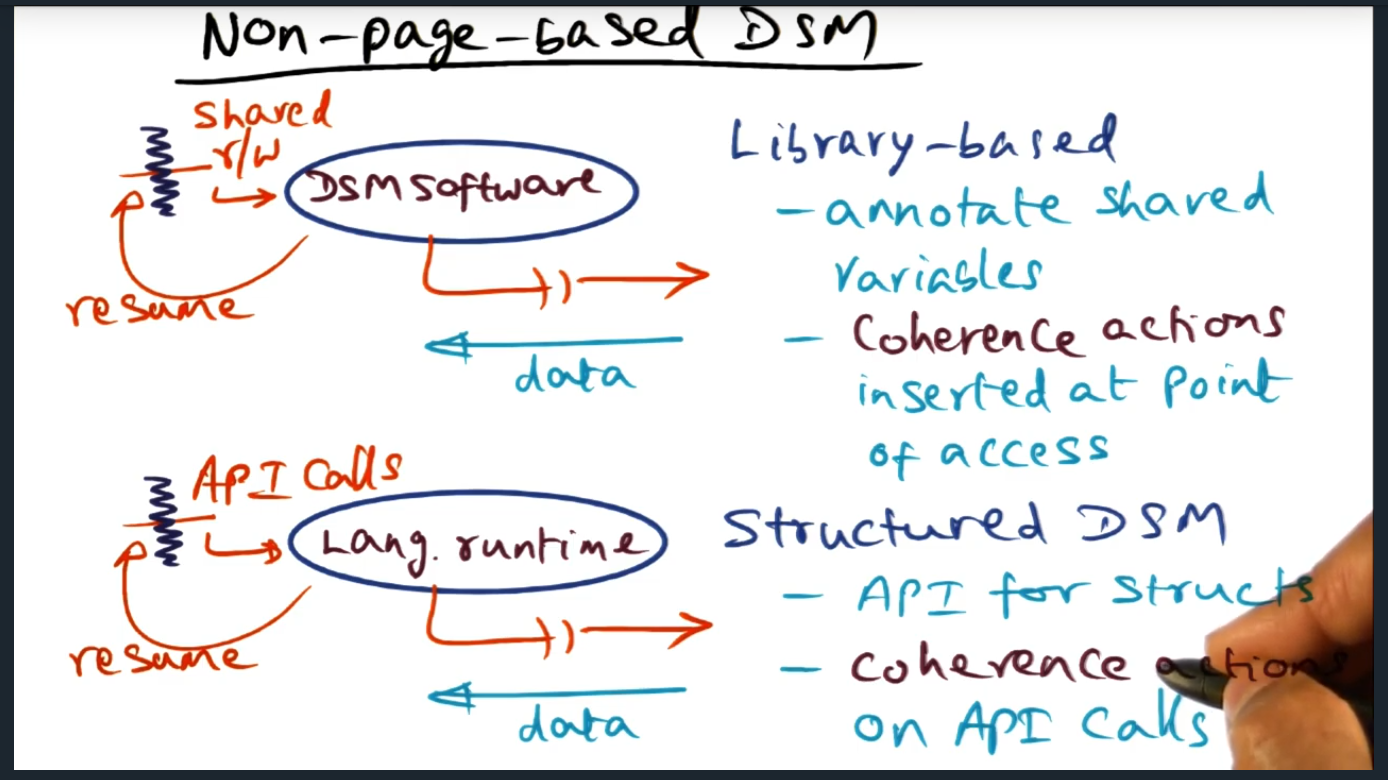

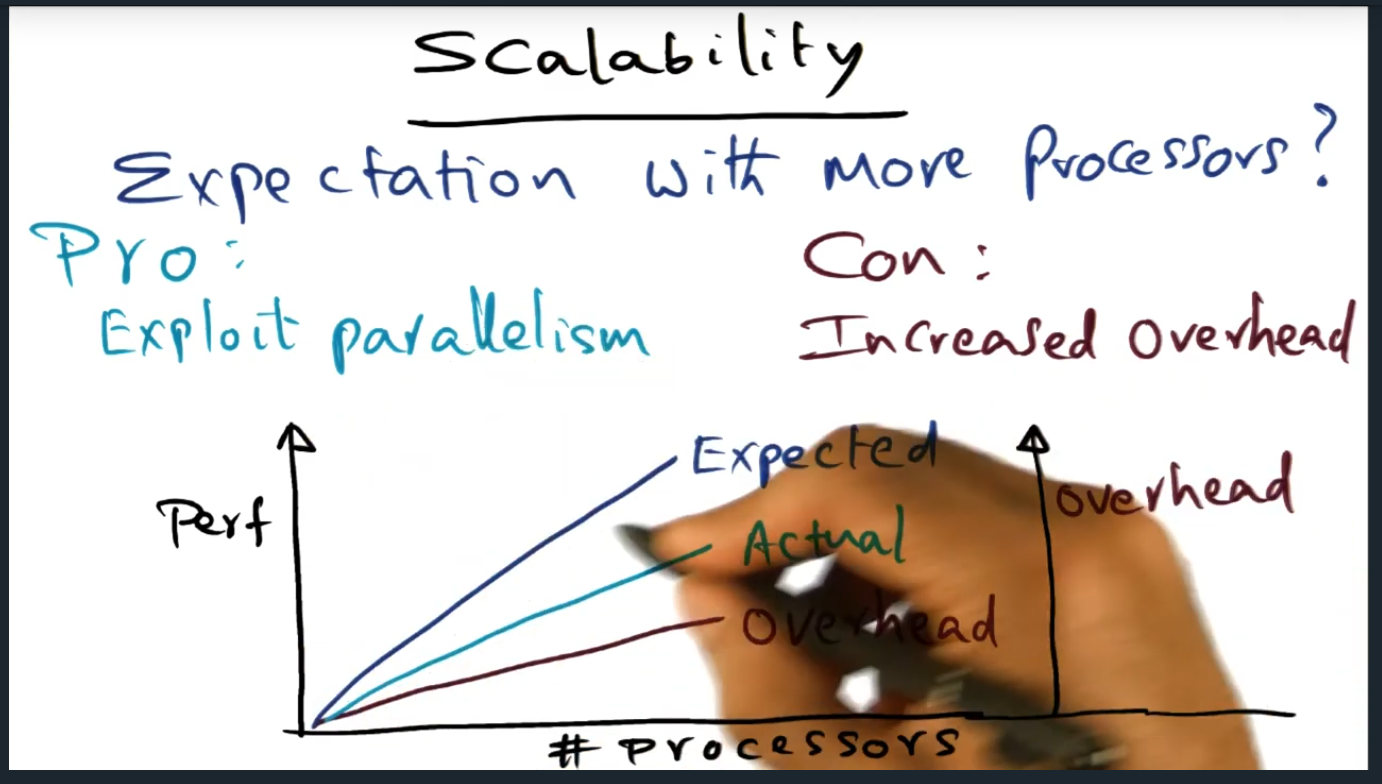

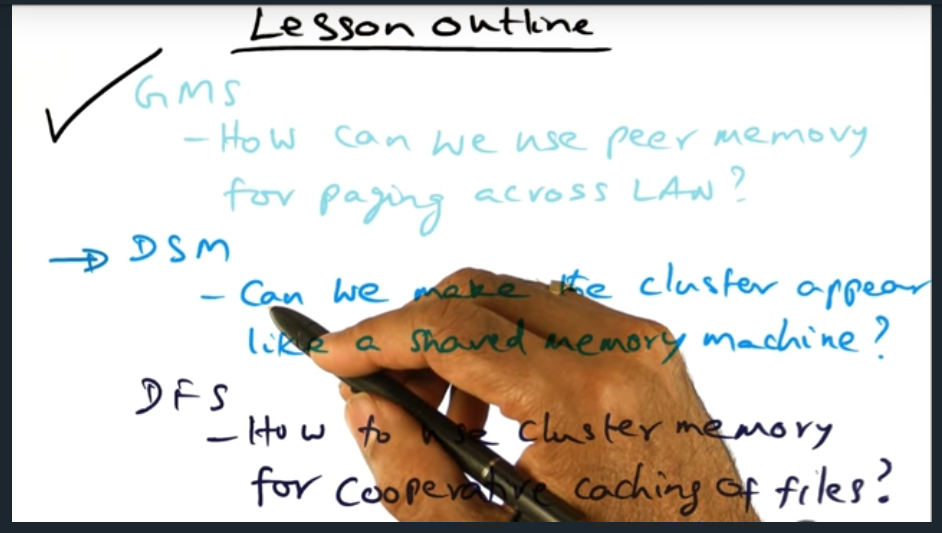

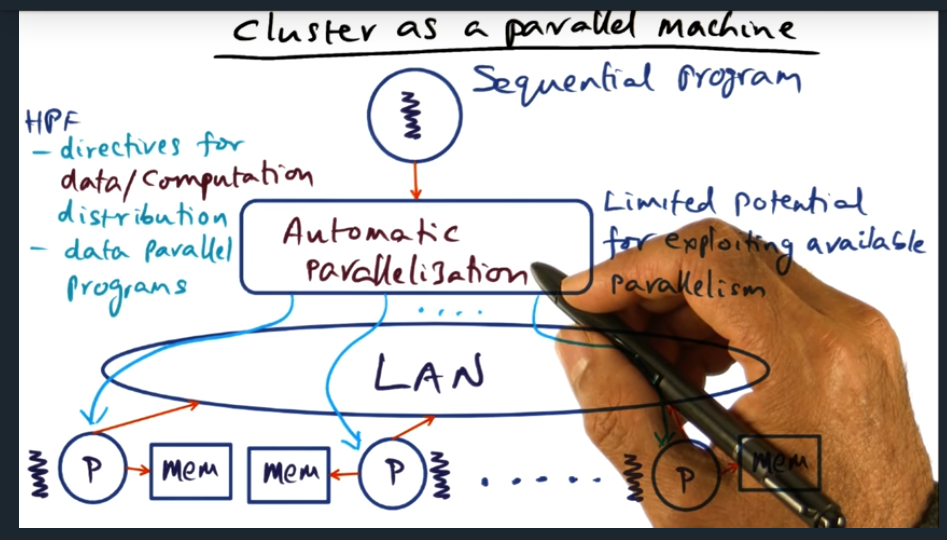

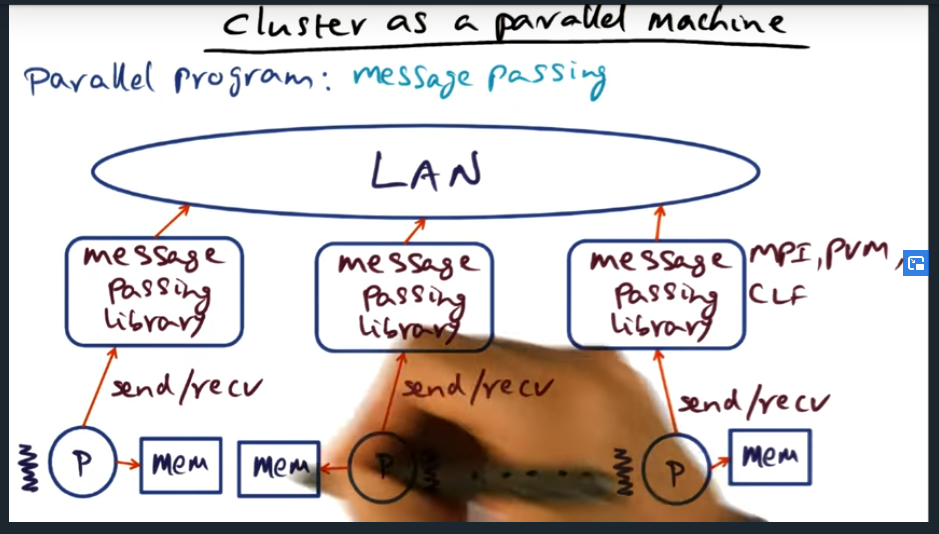

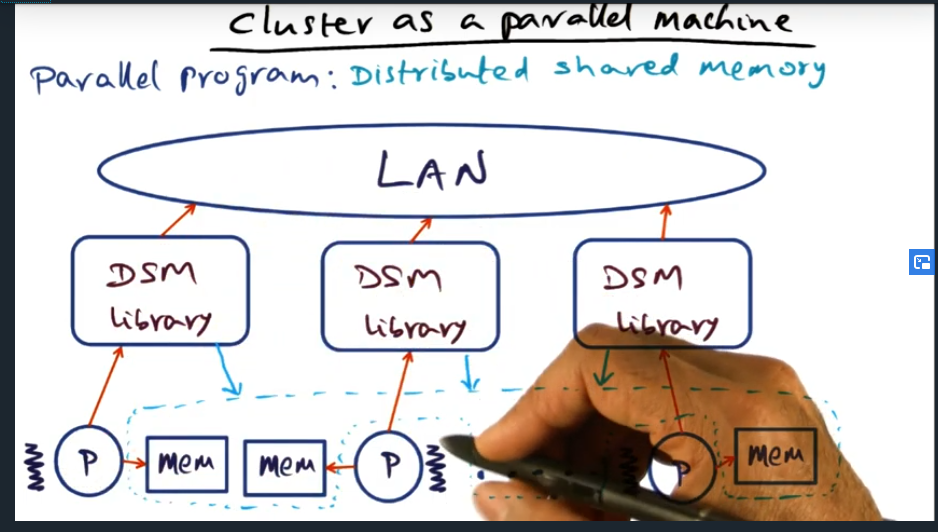

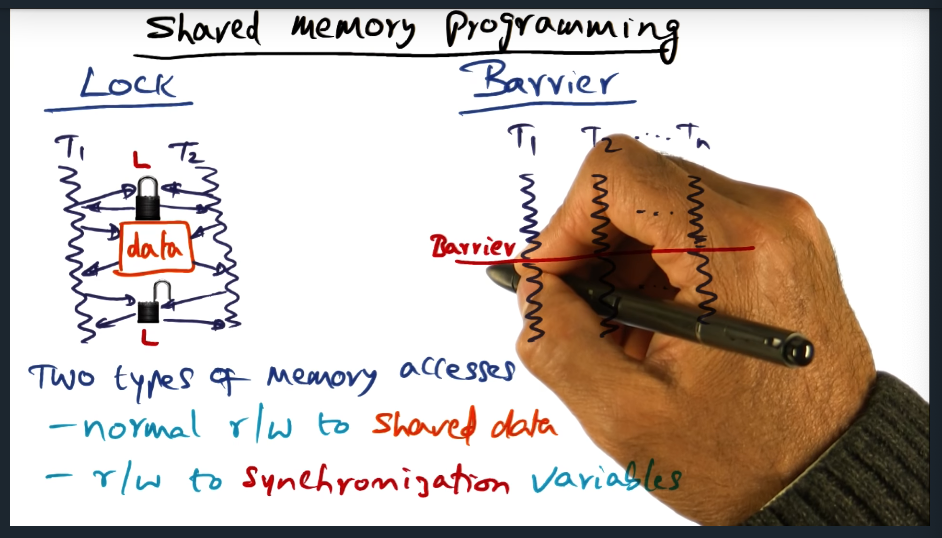

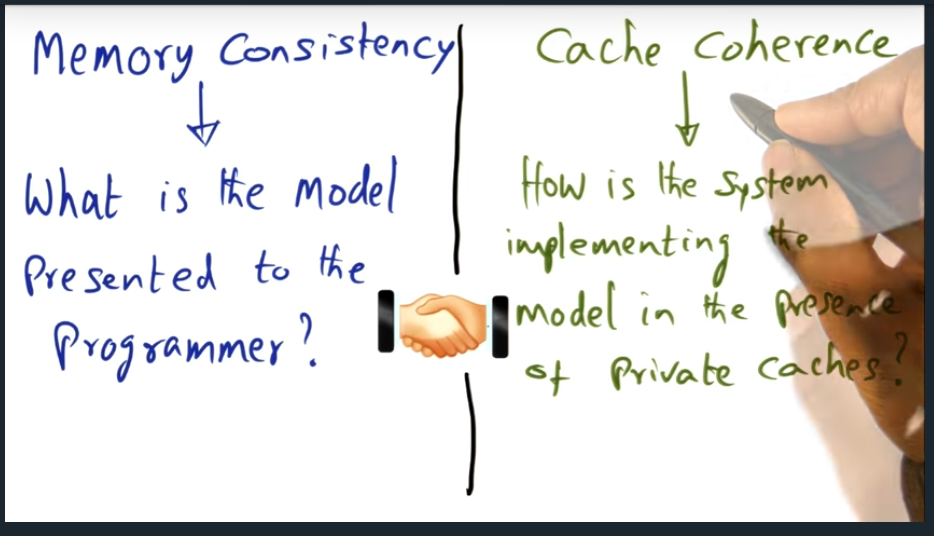

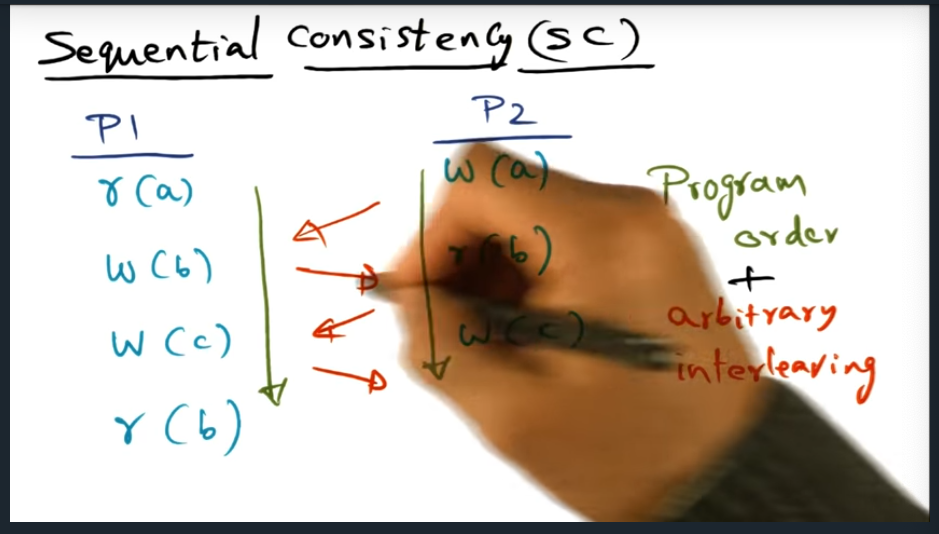

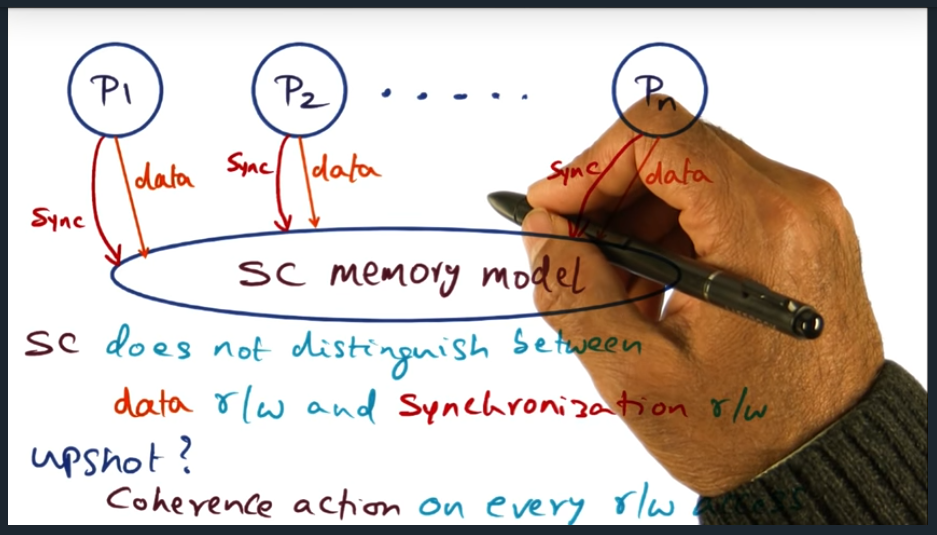

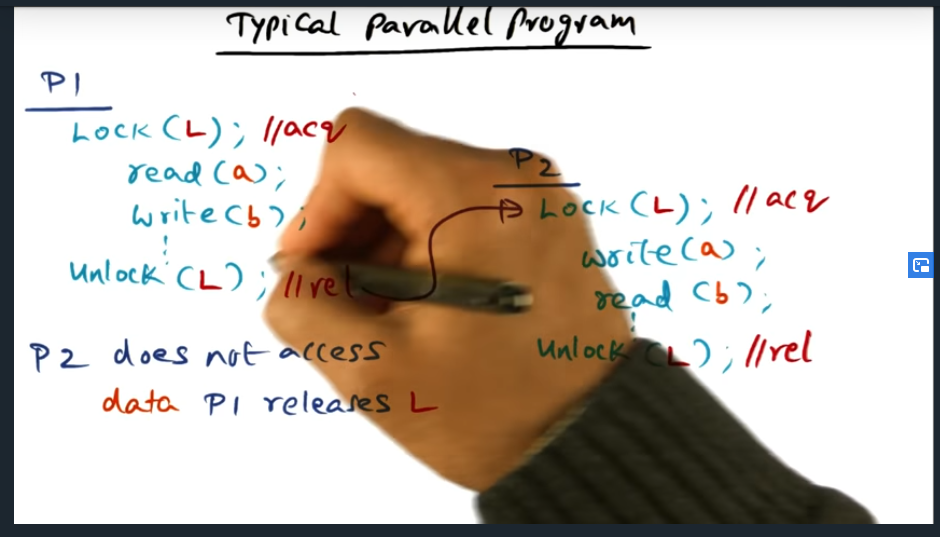

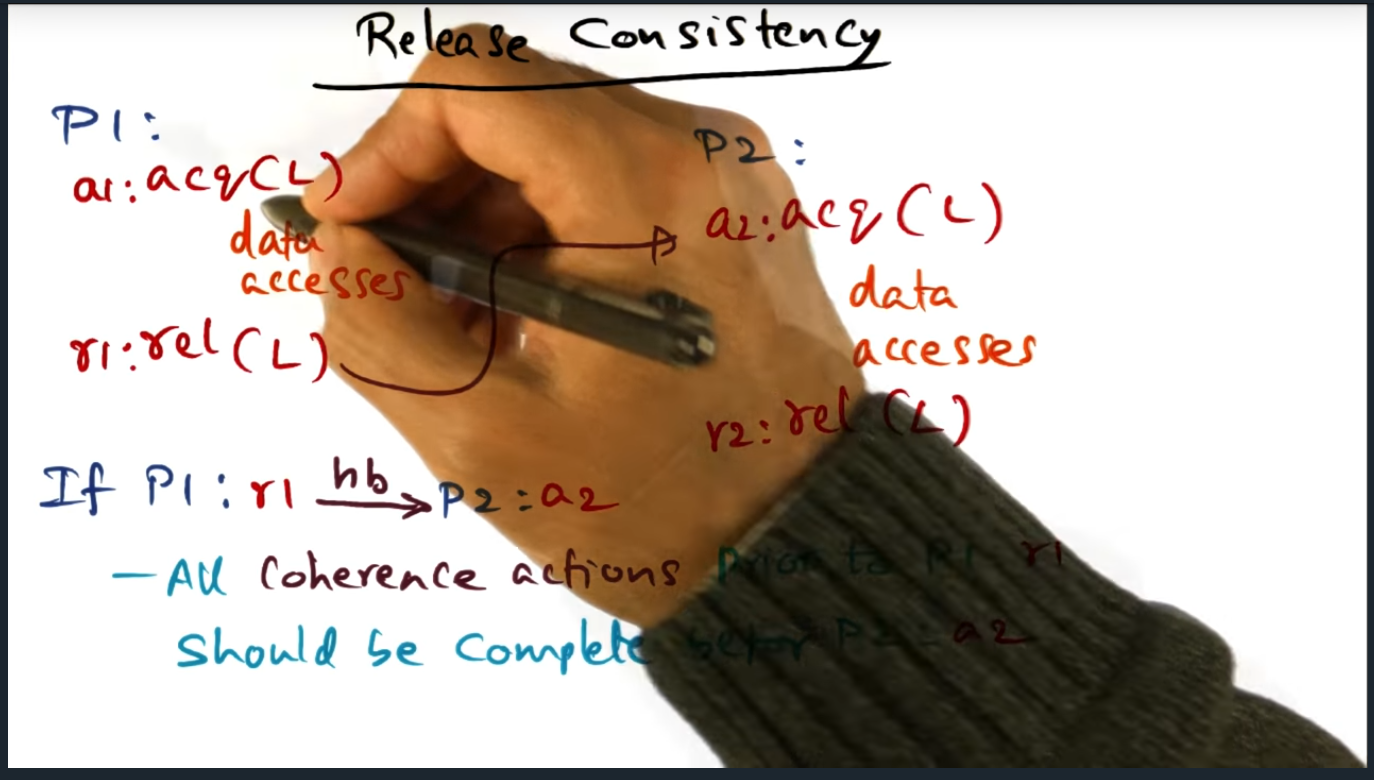

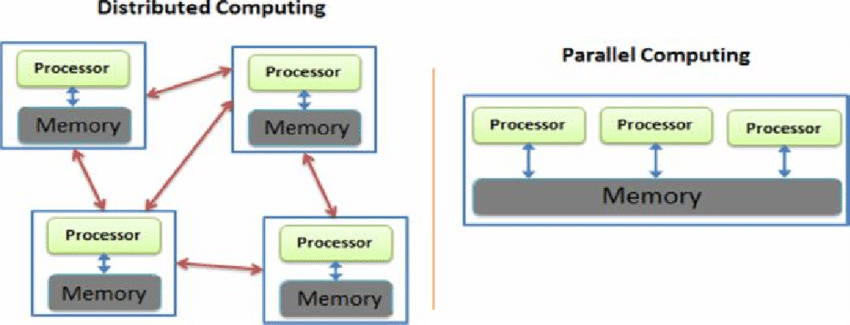

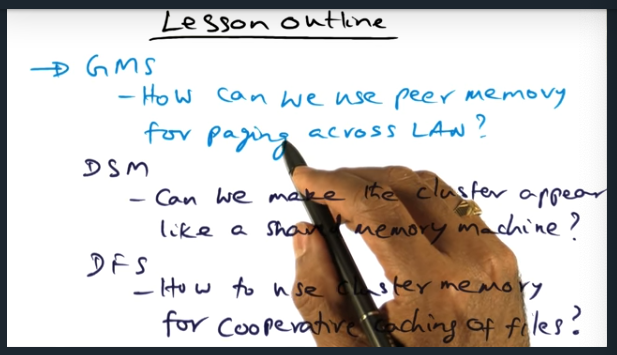

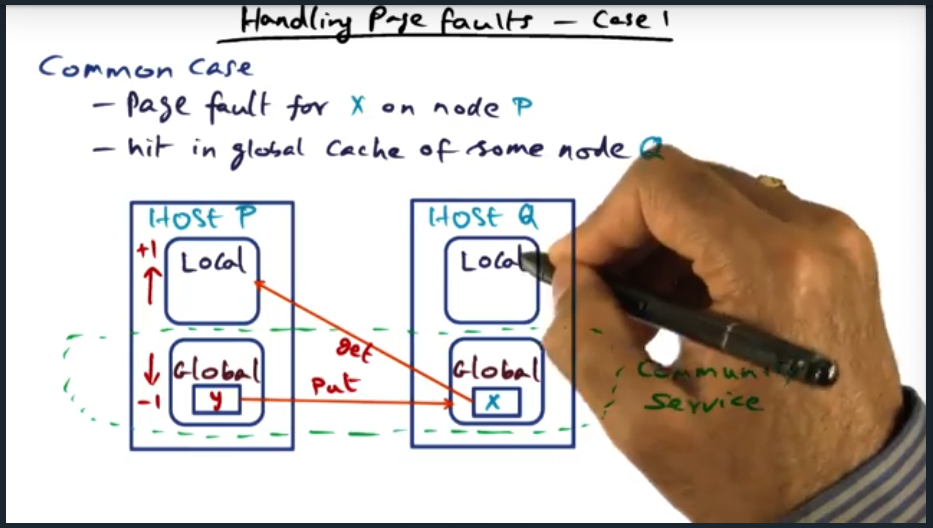

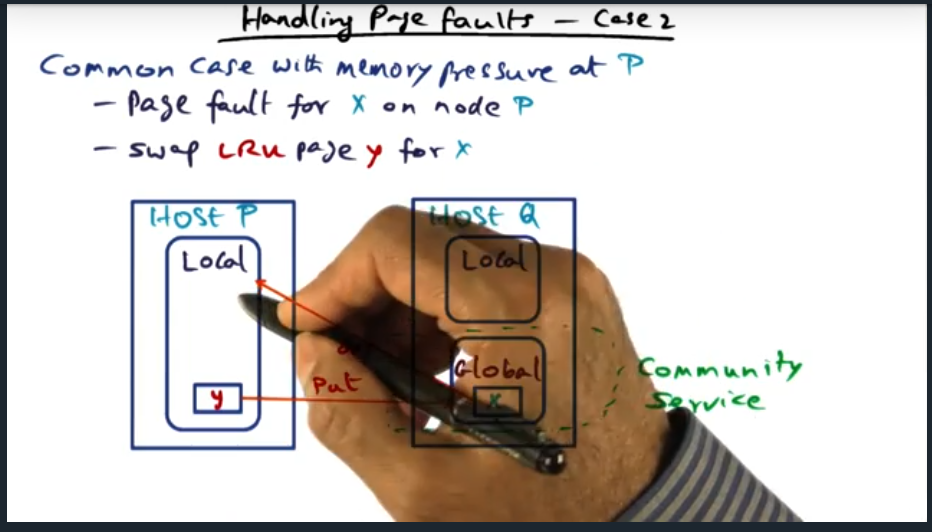

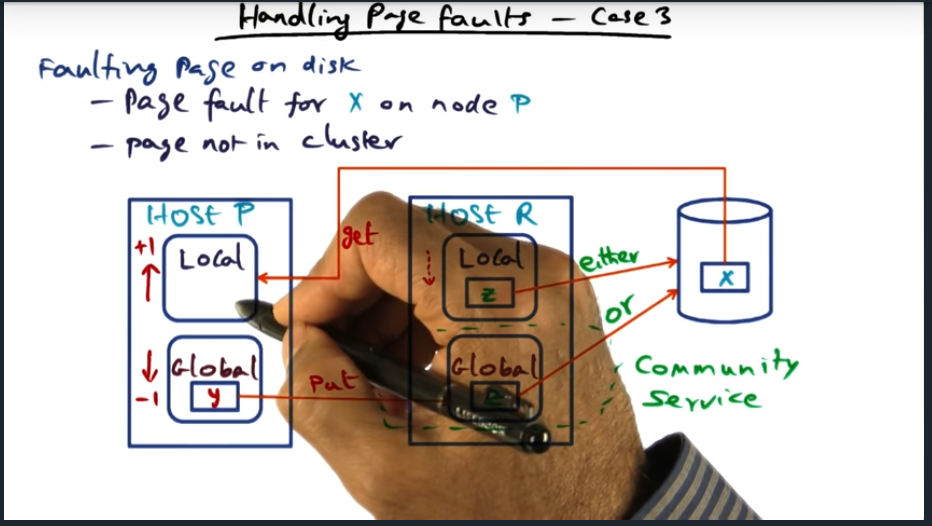

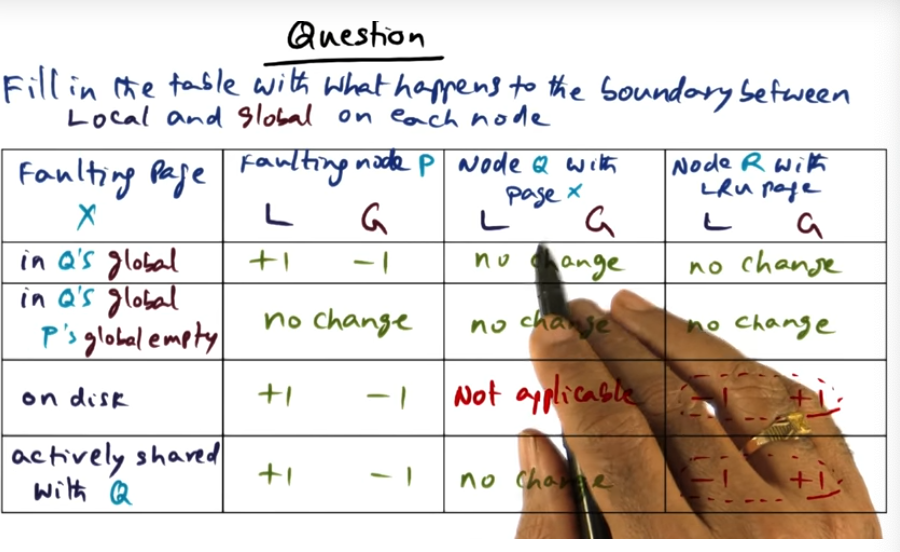

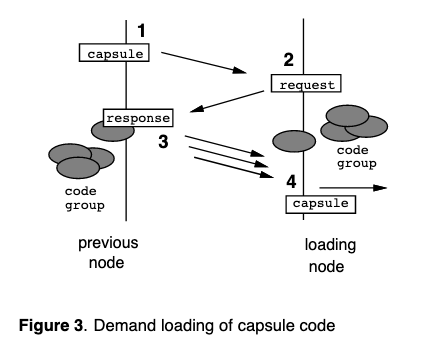

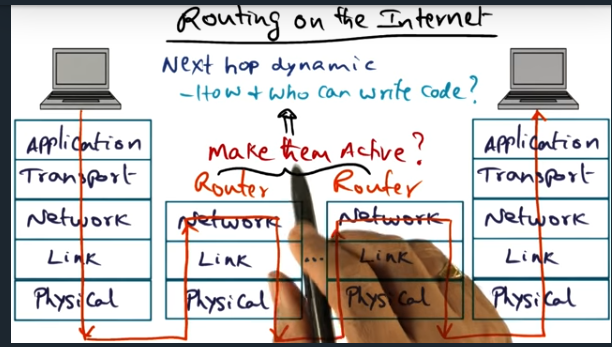

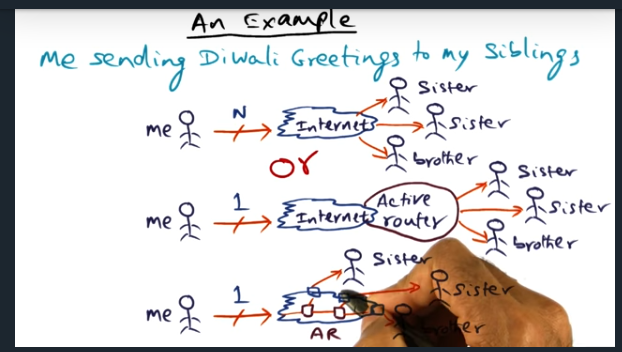

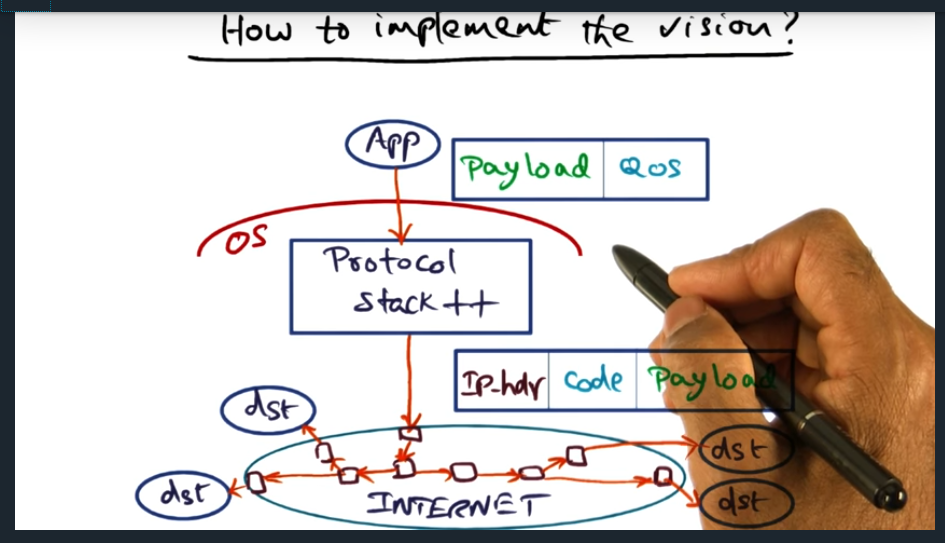

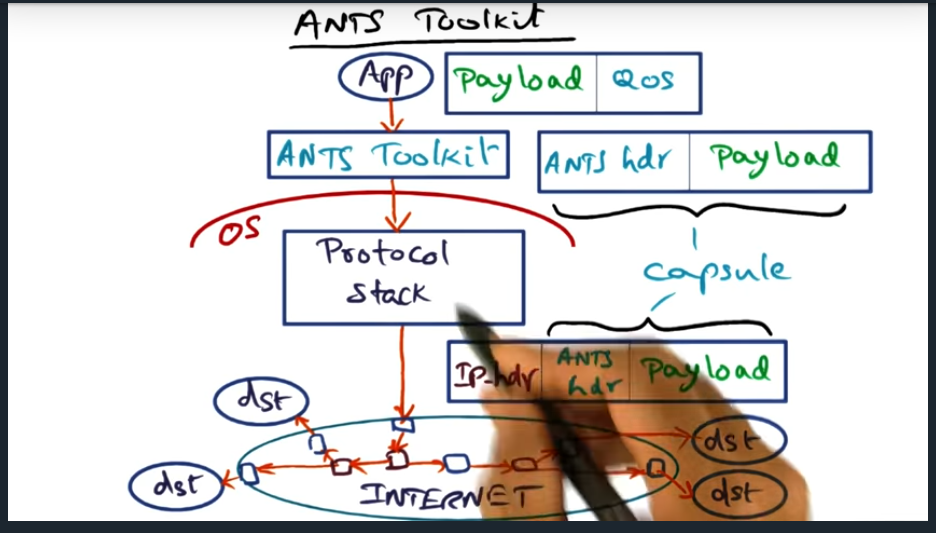

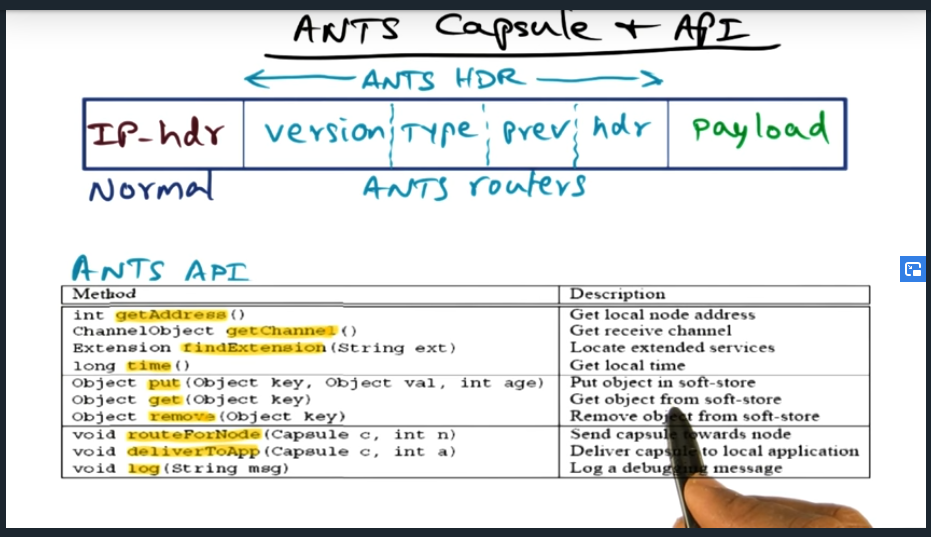

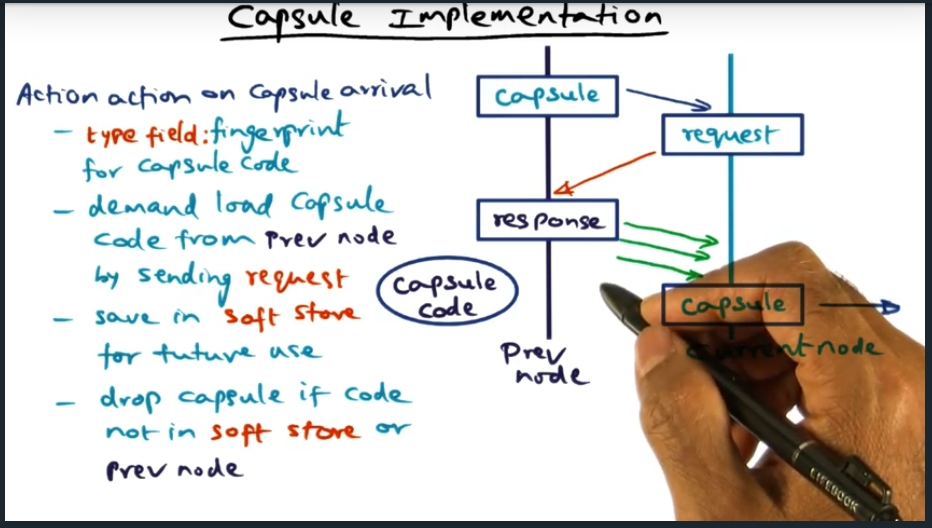

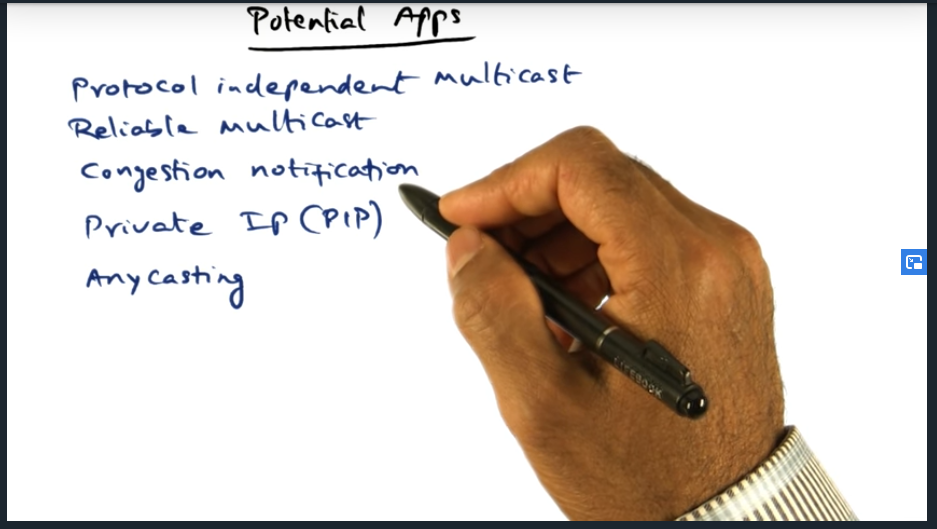

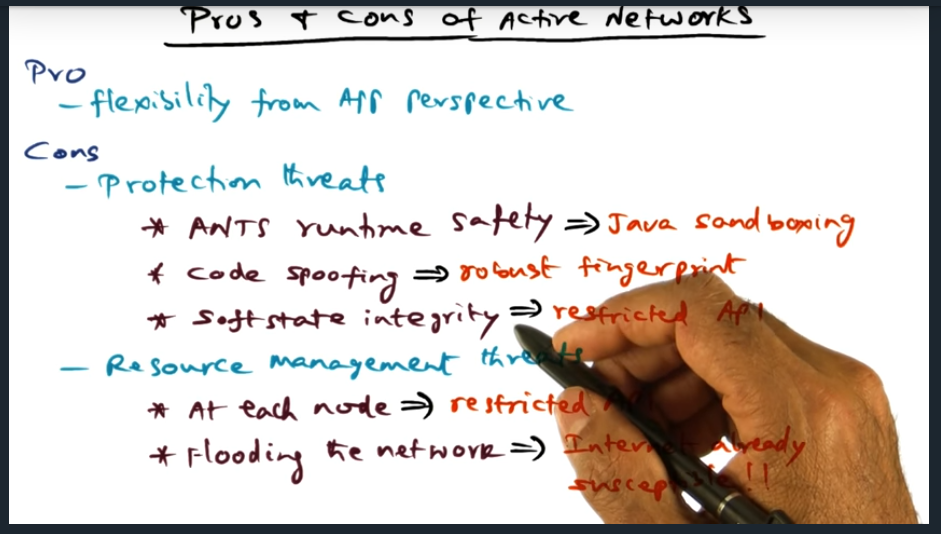

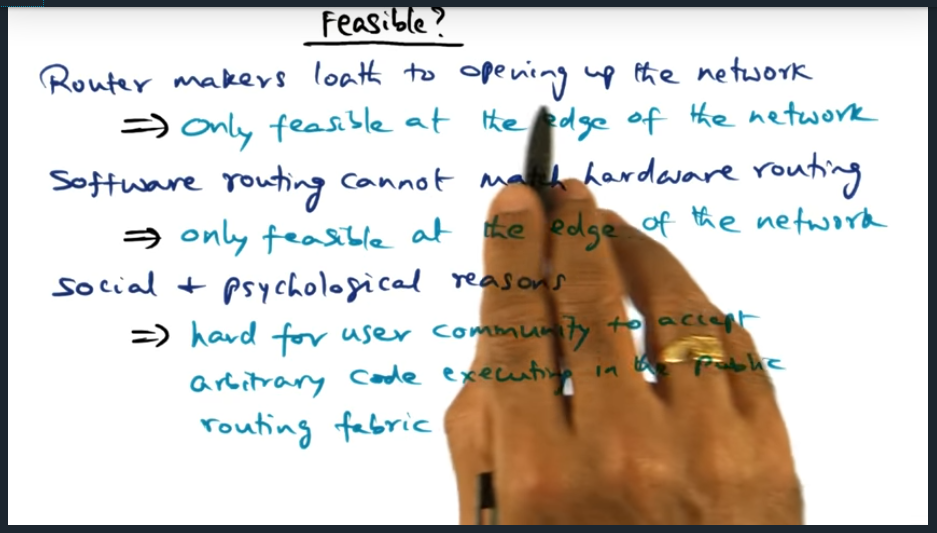

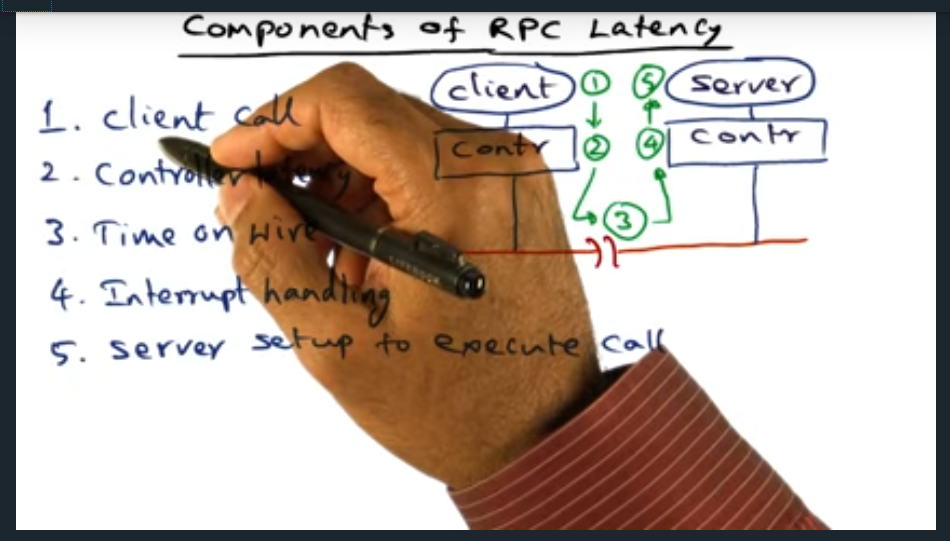

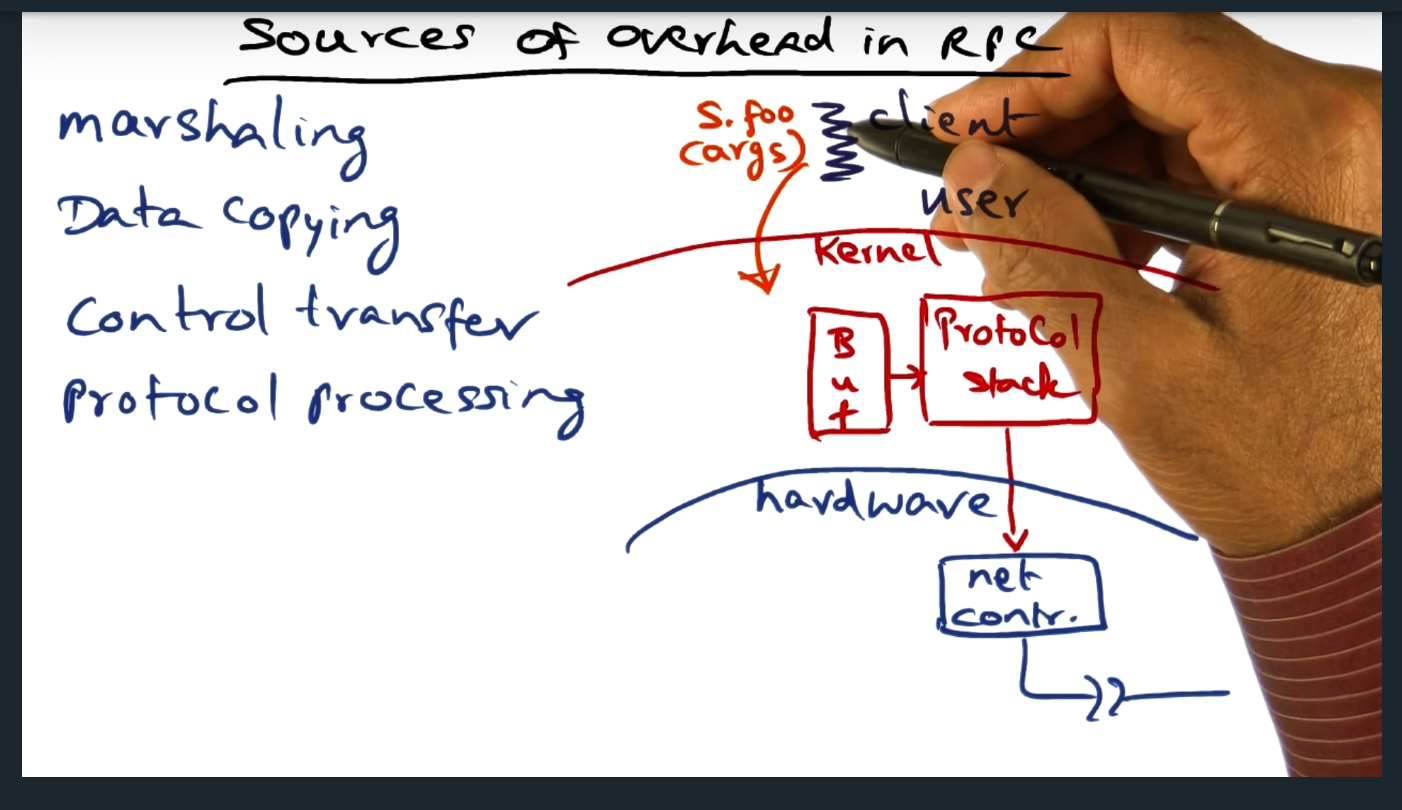

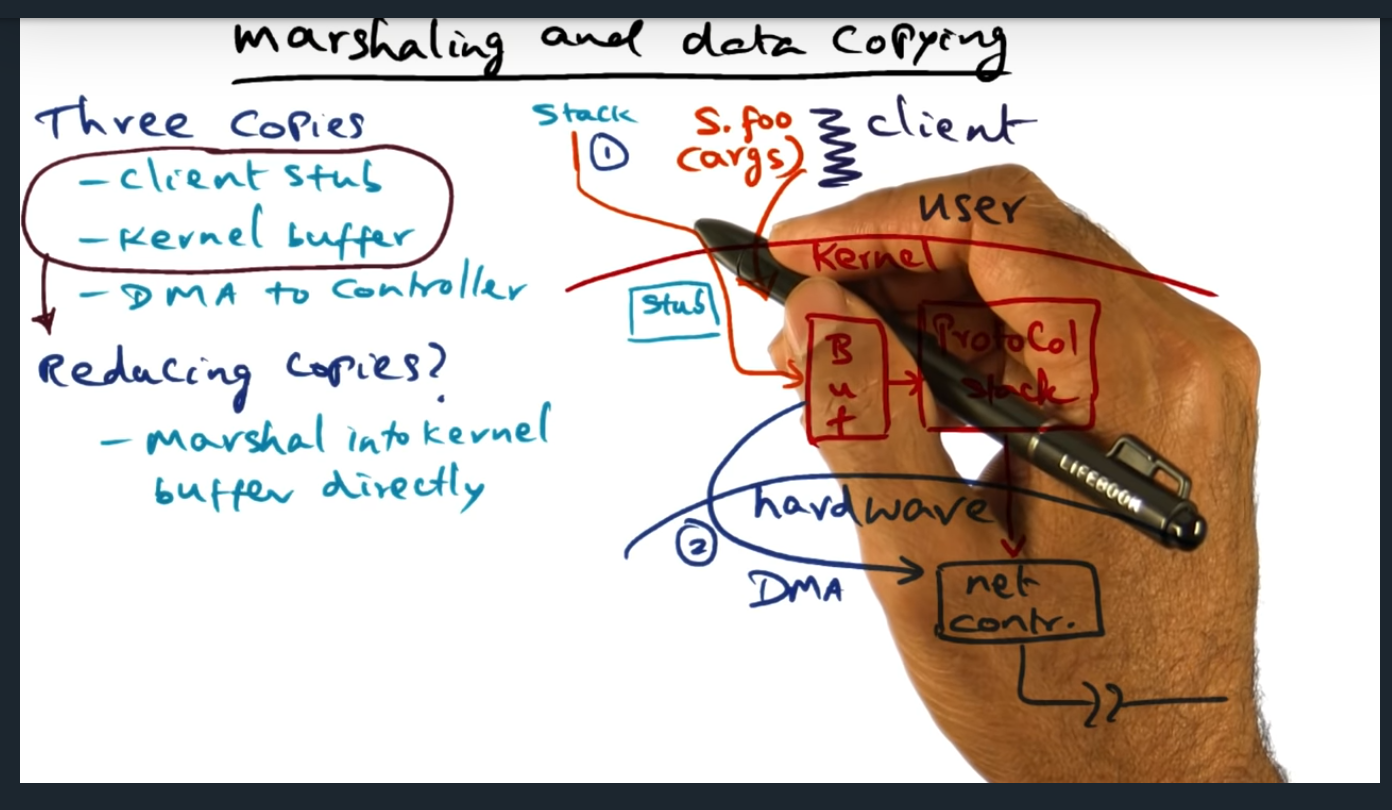

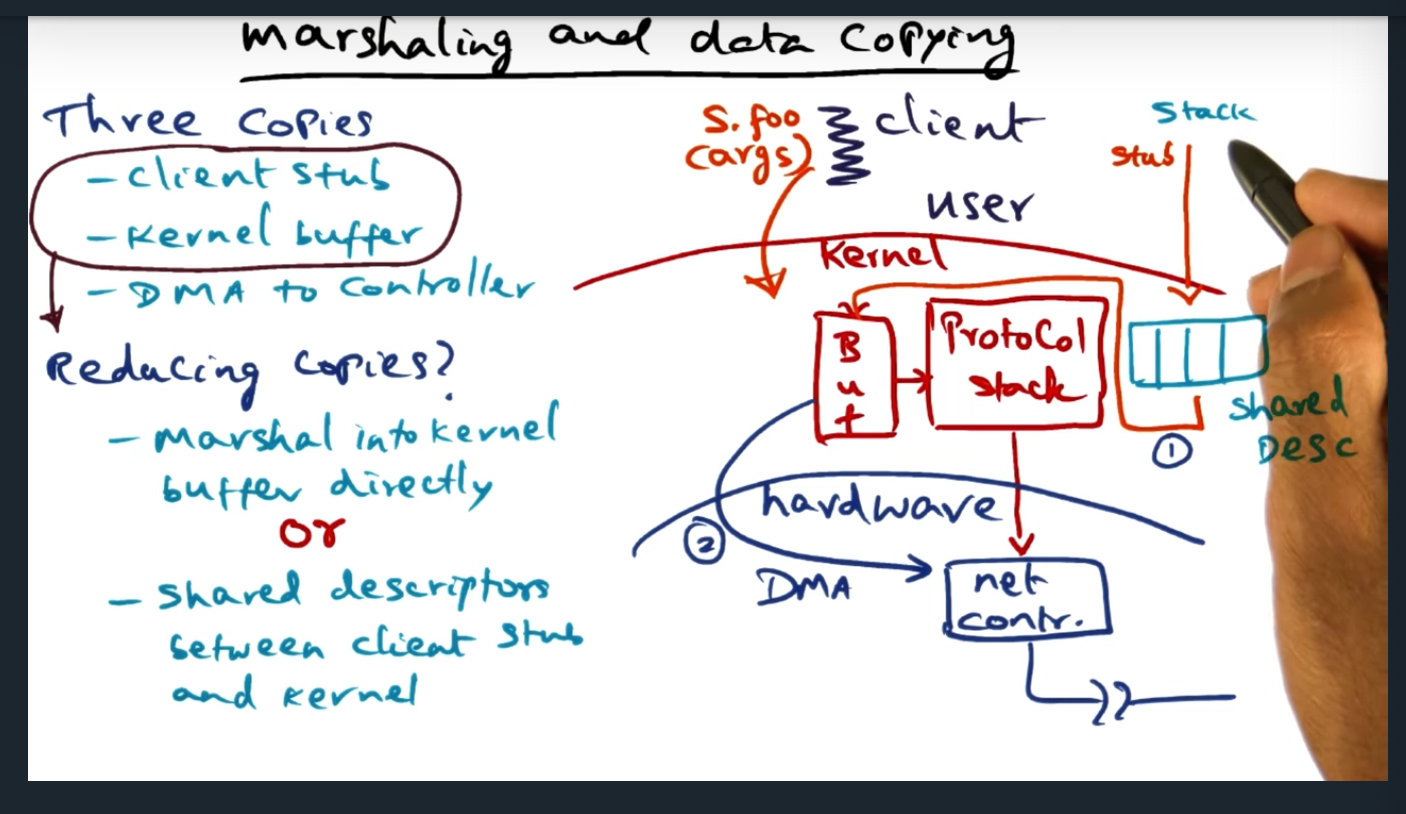

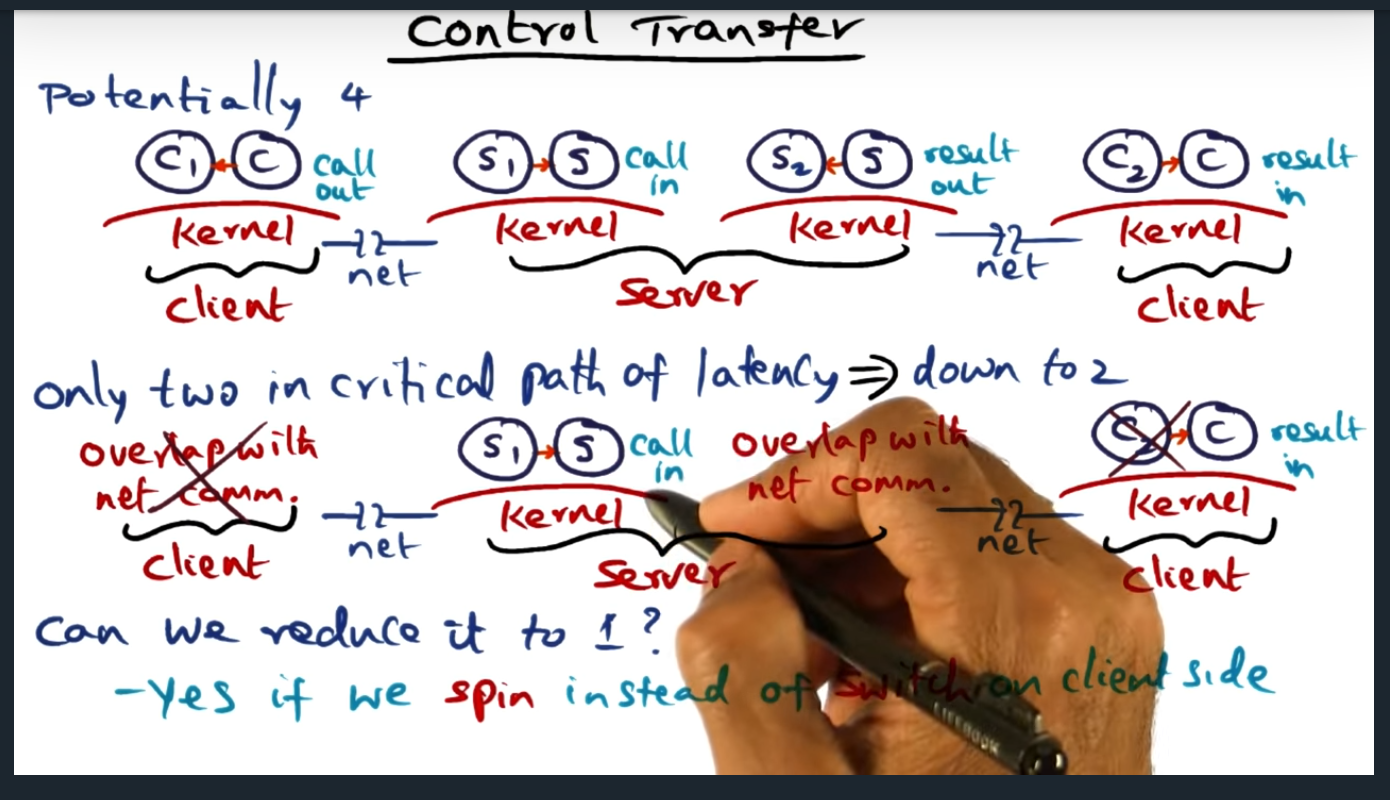

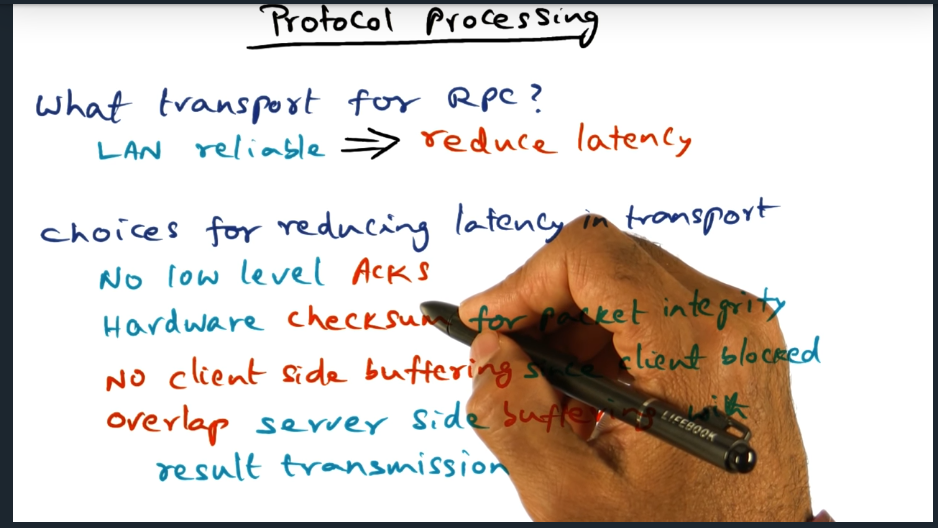

I’m compiling all my blog posts on “advanced operating systems refresher” into a single, nicely formatted e-book. The book will provide a summary and detailed notes on Udacity’s Advanced Refresher course.

Media Consumption

Watched the first two episodes of “This is Us”. Again, as I mentioned in my blog posts, the writers (and cast and crew) deserve huge applause for pivoting and incorporating two major events in history, the COVID-19 pandemic and police brutality against Black people, into the story line. That’s no easy feat but they are pulling it off.

Watched Borat Subsequent Moviefilm. I found the film hilarious and ingenious. It reveals how complicated people can be. For example, two Trump supporters end up taking Borat into their home and at one point, they even speak up on behalf of women.

Home Care

I’m super motivated to keep our new home in tip-top shape. I learned that the previous owners took a lot of pride in the house: the retired couple were out in the front or back yard on a daily basis, the two of them maintaining the lawn and plants.

Learning how to take care of the lawn. That includes learning the different modes of mowing (i.e. mulching, side discharge, bagging), the difference between slow release and fast release nitrogen, the importance of aerating, the importance of applying winterizer two weeks before the last historical freeze day, how to edge properly and so on.

Family

Still working from home and still appreciate the little gestures from Jess throughout the day. I sometimes get lost in a black hole of thoughts and troubleshooting, not drinking any water or eating snacks for hours at a time. So the little snacks that Jess drops off go a long way.

Health

I’ve been taking care of my mental health, but not so much my physical health. I only exercised once last week, which was basically jogging on the treadmill.

Graduate School

Applied the theory of lightweight recoverable virtual memory (LRVM) to work. From advanced operating systems, I took the concept of the abort transaction and suggested that we install a similar handler in our control plane code.
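To make the idea concrete, here is a minimal sketch of what an abort handler could look like. This is not the actual control plane code, and the names (`Transaction`, `set_range`, `abort`, `apply_update`) are hypothetical, only loosely modeled on the undo-record idea from the course: record the old value before touching it, and restore it if anything fails.

```python
import copy


class Transaction:
    """Hypothetical in-memory transaction that keeps undo records."""

    def __init__(self, state):
        self.state = state  # dict that the caller modifies in place
        self.undo = {}      # key -> (existed, old value), captured before first write

    def set_range(self, key):
        # Record the old value once, before the caller modifies it.
        if key not in self.undo:
            existed = key in self.state
            self.undo[key] = (existed, copy.deepcopy(self.state.get(key)))

    def commit(self):
        # Changes stay in place; the undo records are no longer needed.
        self.undo.clear()

    def abort(self):
        # Restore every recorded key to its pre-transaction value.
        for key, (existed, old) in self.undo.items():
            if existed:
                self.state[key] = old
            else:
                self.state.pop(key, None)
        self.undo.clear()


def apply_update(state, updates):
    """Apply all updates or none of them: abort on the first invalid value."""
    txn = Transaction(state)
    try:
        for key, value in updates:
            txn.set_range(key)
            if value is None:
                raise ValueError(f"invalid value for {key}")
            state[key] = value
        txn.commit()
        return True
    except ValueError:
        txn.abort()
        return False
```

The point of the handler is that a failure halfway through an update leaves the state exactly as it was before the update started, rather than half-applied.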