To pass this class, you should

- digest everything written in Joves’s notes (he’s a TA and will release these notes gradually throughout the semester so pay close attention to his Piazza posts)

- join or form a study group of a handful of students

- dedicate at least 20+ hours per week to drill, memorize, and apply algorithms

- complete all the homework assignments, (easy) project assignments, and quizzes (these are all easy points and you’ll need them given that exams make up 70% of your final grade)

- drill ALL the practice problems (both assigned and extra ones published on the wiki) over and over again until you’ve memorized them

Almost failing this class

This class kicked me in the ass. Straight up. Words can barely described how relieved I feel right now; now that the summer term is over, my cortisol levels are finally returning to normal levels.

I’m not exaggerating when I say I teared up when I learned that I received a passing grade. I barely — and I mean barely (less than 1%) — passed this class with a B, a 71%. Throughout the last week of the summer semester, while waiting for the final grades to be published on Canvas, I had fully prepared myself (both mentally and emotionally) for repeating this class, level of anxiety and stress I haven’t felt throughout the last 3 years in the OMSCS program.

Other students in the class felt the same level of despair. One other student shared that he has:

never felt that much pressure and depression from a class in [his] entire academic career.

One other student definitely did not hold back any punches on Piazza:

I am going to open up a new thread after this course finishes out. I am tired of the arrogant culture that is in this program and specifically in this course! There is a lack of trying to understand other perspectives and that is critical for creating a thriving diverse intellectual community.

So yes — this course is difficult.

All that being said, take my review with a pinch of salt. Other reviewers have mentioned that you just need to “put in the work” and “practice all the assigned and wiki problems”. They’re right. You do need to do both those things.

But the course may still stress you out; other courses in the program pretty much guarantee that you’ll pass (with an A or B) if you put in x number of hours; this doesn’t apply for GA. You can put in all the hours and still not pass this class.

Before getting into the exam portion of my review, it’s worth noting that the systems classes I mentioned above play to my strengths as a software engineer building low level systems; in contrast, graduate algorithm predominately focuses on theory and is heavy on the math, a weakness of mine. Another factor is that I’ve never taken an algorithmic course before, so many of the topics were brand spanking new to me. Finally, my mind wasn’t entirely focused on this class given that I had quit my job at FAANG during the first week this class started.

Okay, enough context. Let’s get into discussing more about the exams.

Exams

As mentioned above, do ALL the practice problems (until you can solve them without thinking about it) and really make sure you understand everything in Joves’s notes. I cannot emphasize these two tips enough. You might be okay with just working the assigned practice problems but I highly recommend that you attempt the homework assignments listed on the wiki since questions from the exam seem to mirror (almost exactly) those questions. And again, Joves’s notes are essentially since he structures the answers in the same way they are expected on the exam.

Exam 1

Exam 1 consists of 1) dynamic programming and 2) Divide and Conquer (DC)

Read the dynamic programming (DP) section from the DPV textbook. Practice the dynamic programming problems over and over and over again.

Attempt to answer all the dynamic programming (DP) problems from both the assigned practice problems and all the problems listed on the wiki. Some other reviewers suggest only practicing a subset of these problems but just cover your bases and practice ALL of practice problems — over and over again, until they become intuitive and until you can (with little to no effort) regurgitate the answers.

For the divide and conquer question, you MUST provide an optimal solution. If you provide a suboptimal solution, you will be dinged heavily: I answered the question a correct solution but was O(n) and not O(logn), I only lost half the points. A 50%. So, make sure you understand recursion really well.

Exam 2

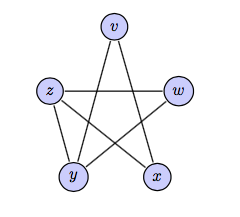

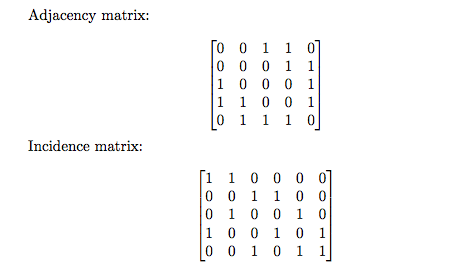

Exam 2 focuses on graph theory. You’ll likely get a DFS/Dijkstra/BFS question and another question that requires you understand spanning trees.

The instructors want you to demonstrate that you can use the algorithms as black boxes (no need to prove their correctness so you can largely skip over the graph lectures). That is, you must understand when/why to use the algorithms, understand their inputs and outputs, and memorize their runtime complexity.

For example, given a graph, you need to find out if a path exists from one vertex to another.

To solve this problem, should know explore algorithm like the back of your hand. You need to know that the algorithm requires both an undirected (or directed) graph and a source vertex as inputs. And the algorithm returns a visited[u] array, each entry set to True if such a path exists.

That’s just one example. There are many other algorithms (e.g. DFS, BFS, Krushkal’s MST) you need to memorize. Again, see Joves’s notes (recommendation #1 at the top of this page). Seriously, Joves, if you are reading this, thanks again. Without your notes, I would 100% have failed the course.

Exam 3

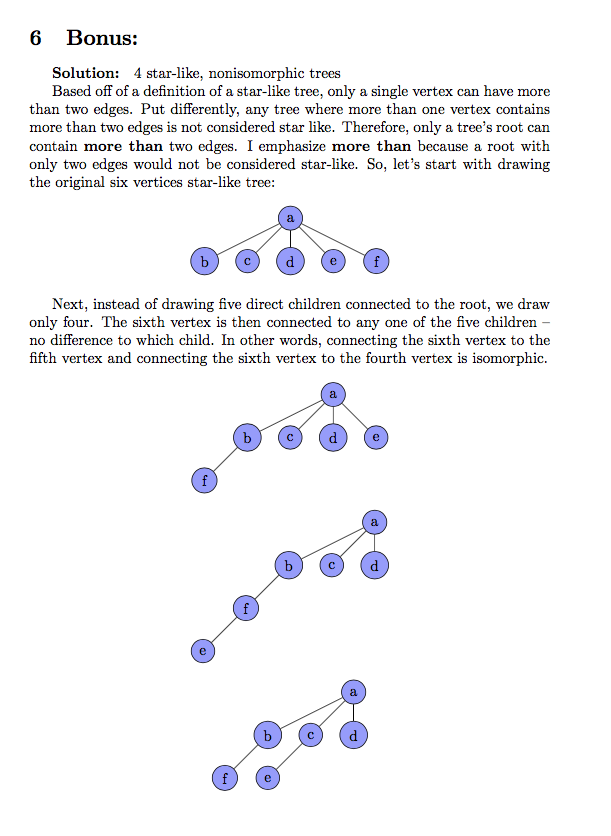

Understand the difference between NP, NP Hard, NP-Complete.

I cannot speak much to the multiple choice question (MCQ) since I bombed this part of the exam. But I did relatively well on the single free-form question, again, thanks to Joves’s notes. Make sure that you 1) Prove that a problem is in NP (i.e. solution can be verified in polynomial time) and 2) You can reduce a known NP-Complete problem to this new problem (in that order — DO NOT do this backwards and lose all the points).

Summary

Some students will cruise this class. You’ll see them on Piazza and Slack, celebrating their near perfect scores. Don’t let that discourage you. Most of students find this topic extremely challenging.

So just brace yourself: it is a difficult course. Put the work in. You’ll do fine. And I’ll be praying for you.