Another installment of my weekly reviews. I think the practice of carving out around 30 minutes on Sunday to look back at the previous week and look ahead to the next one helps me in several ways. First, the posts help me recognize the tiny victories that I often neglect, and they also help me appreciate the things in my life that are easy to take for granted (like a stable marriage, healthy children, a roof over our heads, and not having to worry about my next paycheck). Second, these weekly rituals tend to reduce my anxiety, giving me some sense of control over the upcoming week that will of course not go according to plan.

Looking back at last week

Writing

- Published 6 daily reviews this past week

- Published post on how to convert iPhone images from .heic to .jpeg

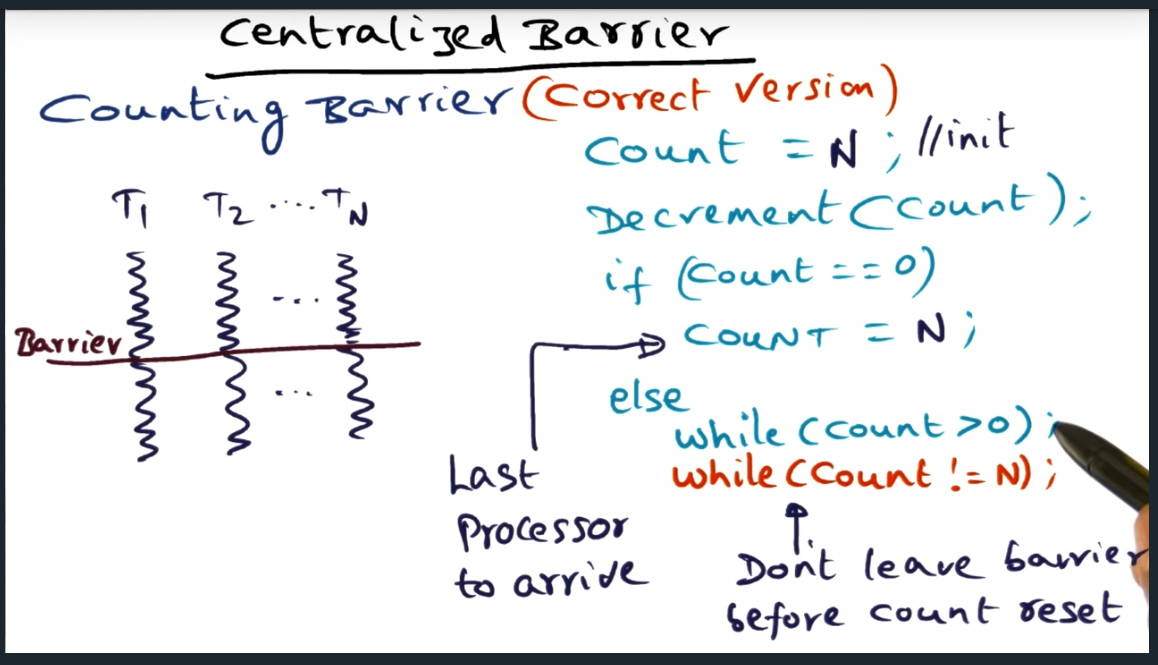

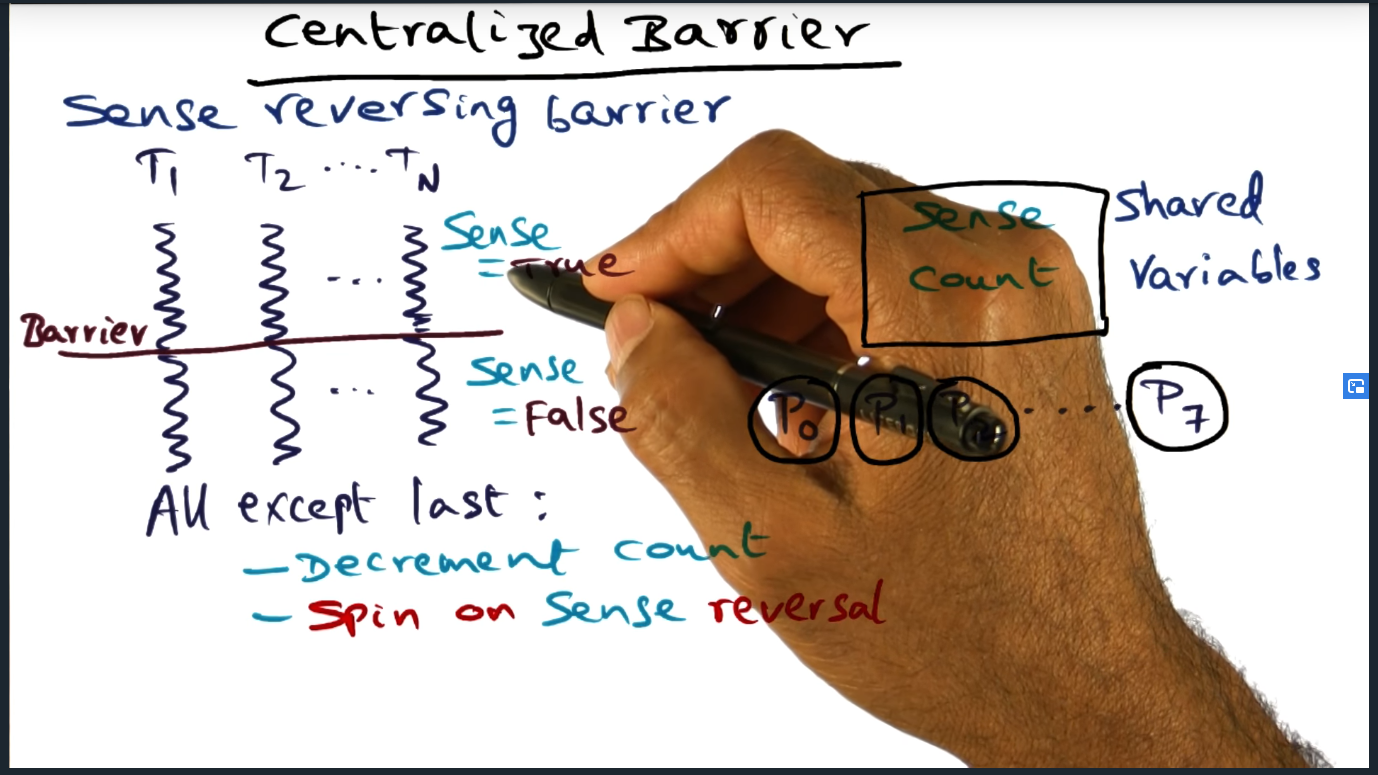

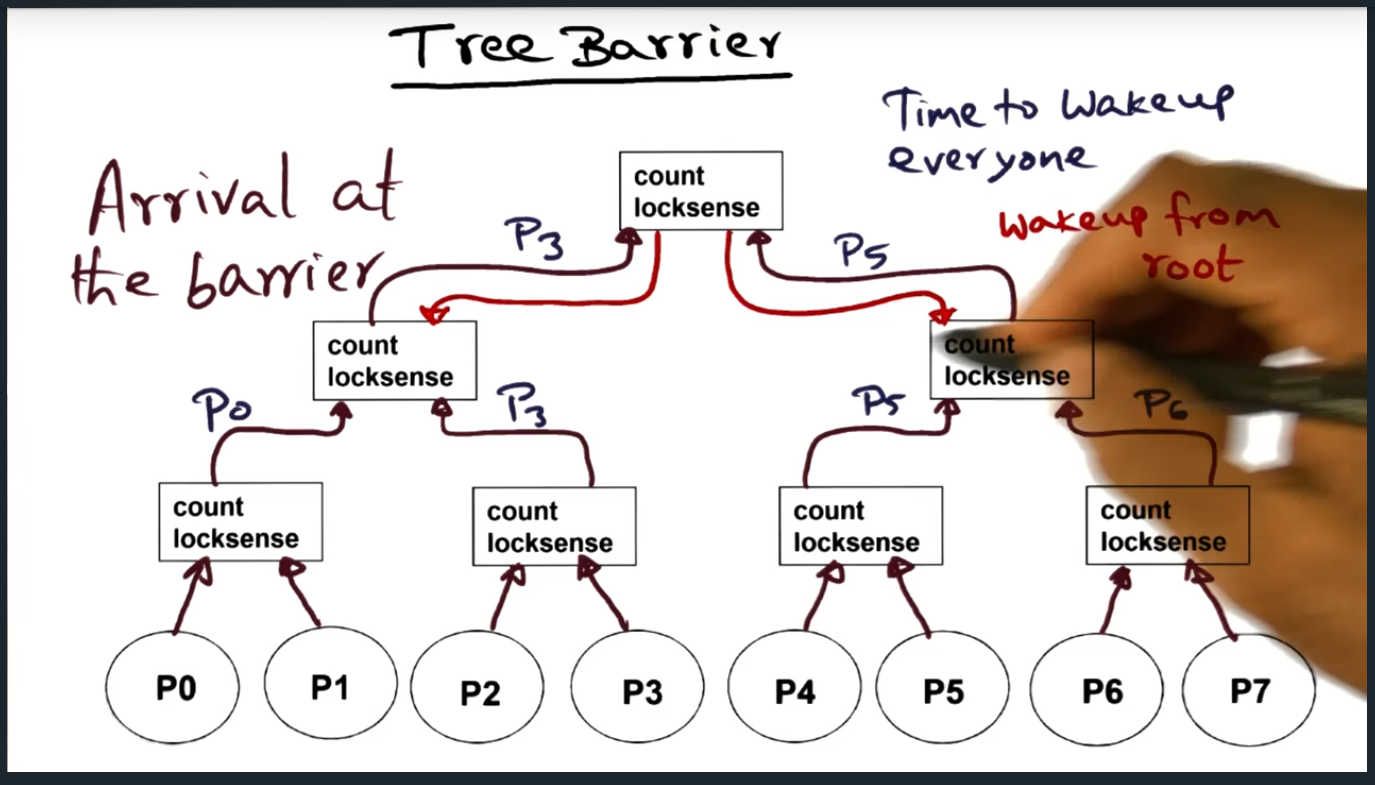

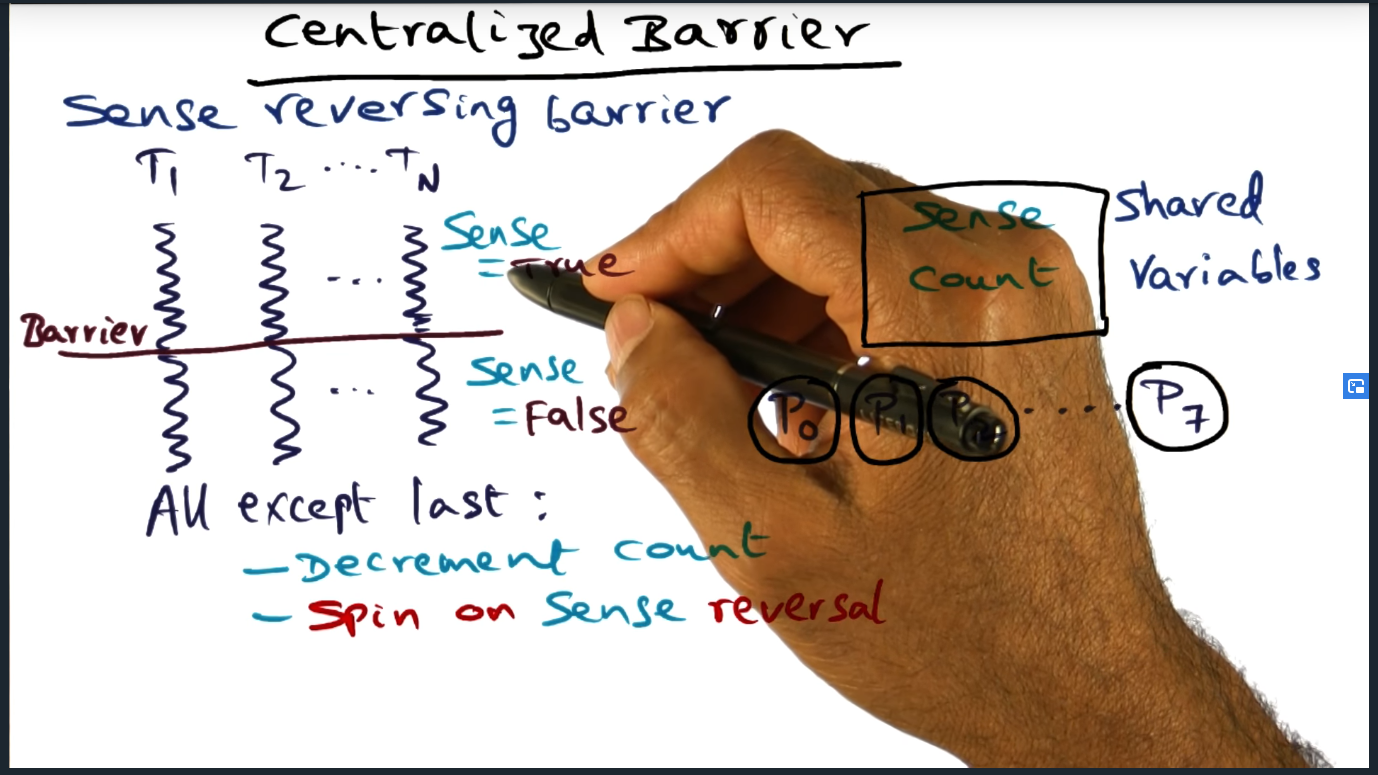

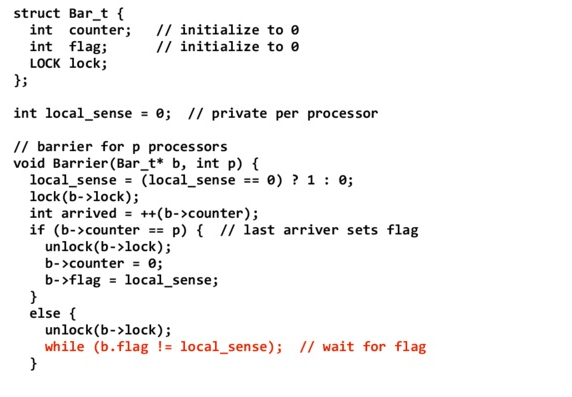

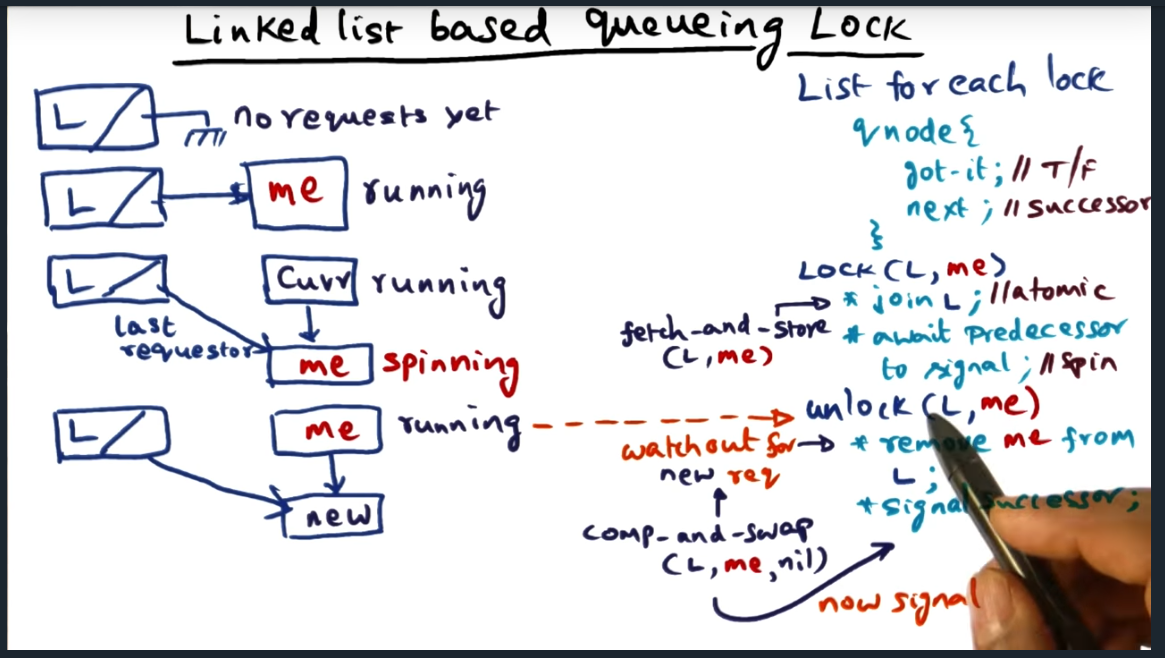

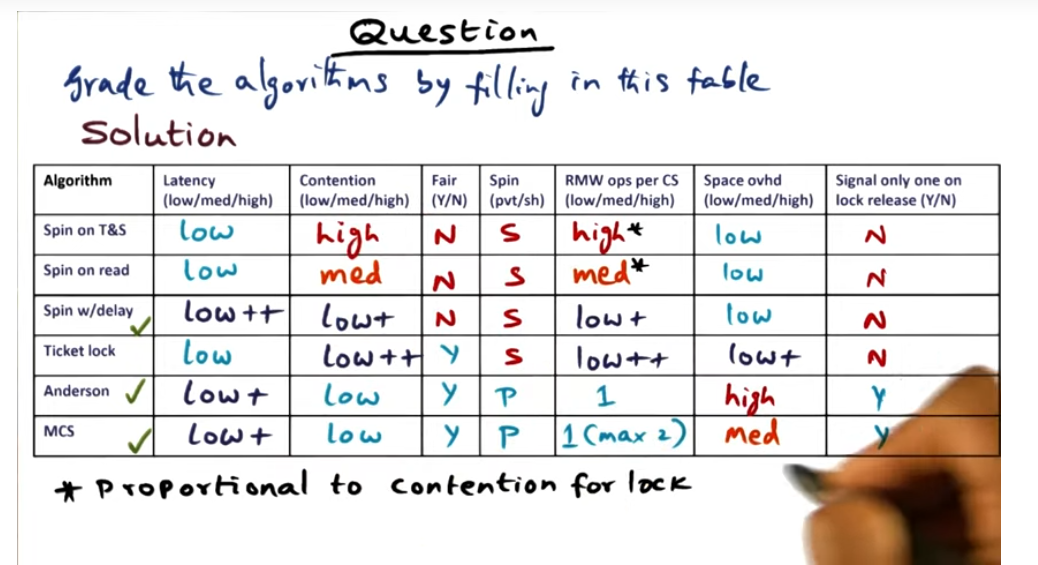

- Published notes on barrier synchronization and mutual exclusion synchronization

Things I learned

- Learned how static asserts are a great way to perform sanity checks at compile time, for example to ensure that your data structures fit within the processor’s cache lines

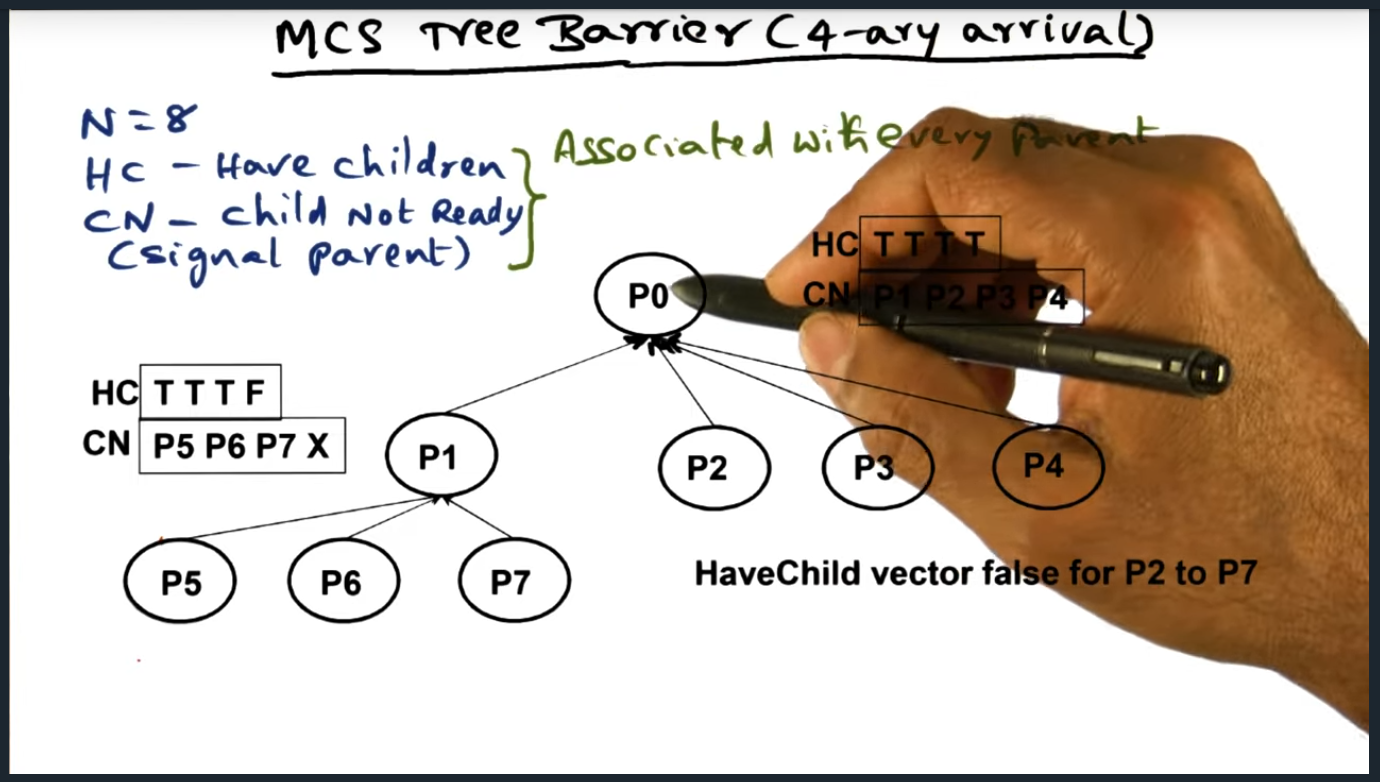

- Learned a new type of data structure called an n-ary tree (used for the MCS tree barrier)

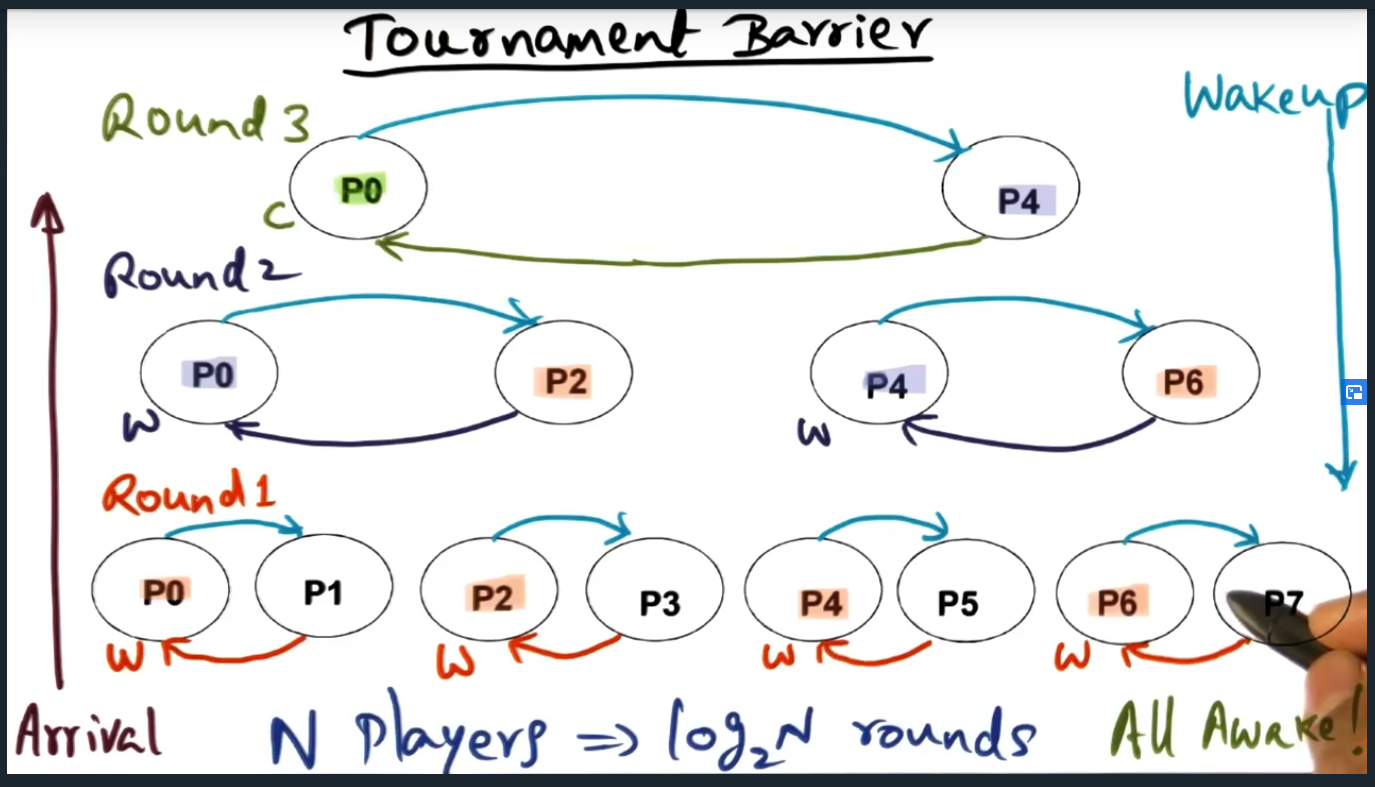

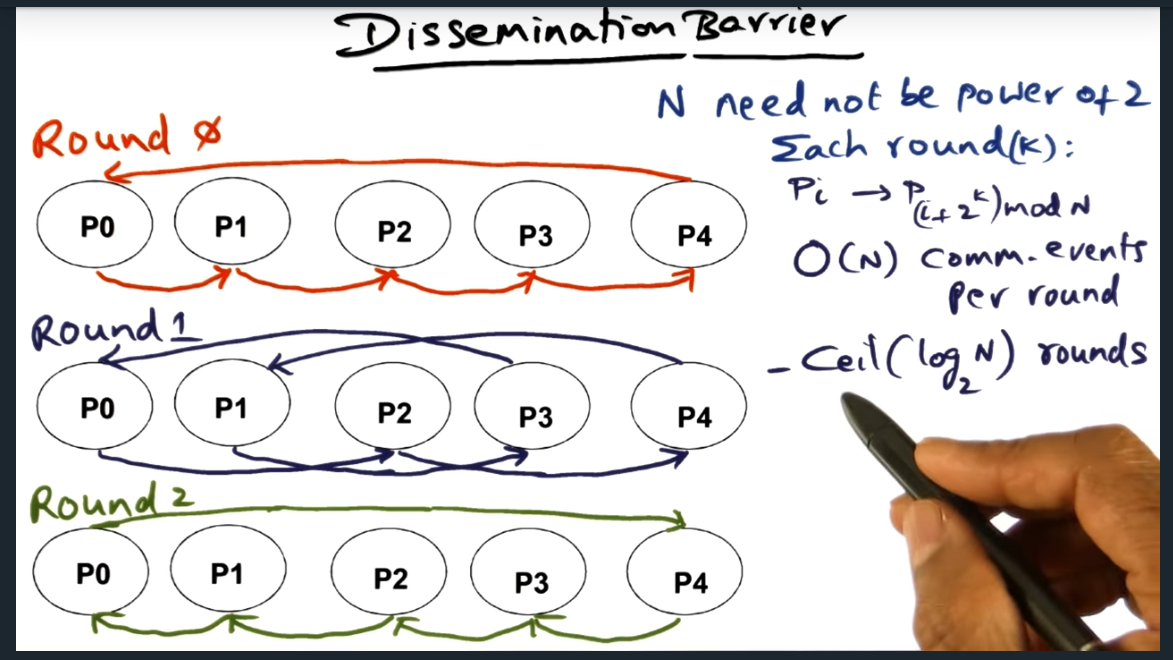

- Learned about the different ways to implement mutual exclusion and ways to implement barrier synchronization (thanks advanced operating systems course)

- Read C code at work that helped sharpen my data structure skills, since I saw firsthand how production code trades space for performance by creating an index that bounds the range of a binary search
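
A minimal sketch of the static assert idea from the first bullet above. It is not the code from work or from the course: the 64-byte cache line size, the `padded_counter` struct, and the `cache_lines_needed` helper are all assumptions made up for illustration. It uses the C11 `static_assert` macro from `<assert.h>`, so a struct that grows past one cache line would fail at compile time rather than show up later as a performance problem.

```c
#include <assert.h>   /* static_assert macro (C11) */
#include <stddef.h>   /* size_t */
#include <stdint.h>   /* uint64_t */

/* Assumption: 64-byte cache lines (common on x86-64, not universal). */
#define CACHE_LINE_SIZE 64

/* Hypothetical per-thread counter, padded so it fills one cache line. */
struct padded_counter {
    uint64_t value;
    char pad[CACHE_LINE_SIZE - sizeof(uint64_t)];
};

/* Compilation fails here if the struct is not exactly one cache line. */
static_assert(sizeof(struct padded_counter) == CACHE_LINE_SIZE,
              "padded_counter must occupy exactly one cache line");

/* Returns how many cache lines an object of the given size spans. */
size_t cache_lines_needed(size_t size)
{
    return (size + CACHE_LINE_SIZE - 1) / CACHE_LINE_SIZE;
}
```

Adding a field to `padded_counter` without shrinking `pad` would trip the assertion during the build, which is the kind of sanity check the bullet describes.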

Family and Friends

- Taught Elliott how to shake her head (i.e. say “No”). Probably the biggest mistake this week since she’s constantly shaking her head (even when she’s trying to say “yes”). Does teaching her how to touch her shoulders balance out teaching her how to say no?

- Finally was able to book Mushroom an appointment to get her groomed (with COVID-19, PetSmart grooming was closed for a time). I felt pretty bad and even tried cutting parts of her fur myself because patches of her hair were getting tangled and she was itching at them and giving herself heat rashes

- Chased Elliott around the kitchen while trying to feed her spoonfuls of broccoli and potato that Jess cooked up for her. I believe the struggle of Elliott not sitting still during lunch is karma, payback for all the times when I was her age and made my parents chase me around

- Watched several episodes of Fresh Off the Boat while eating dinner with Jess throughout the week. We are really enjoying this show and I find the humor relatable as a Vietnamese American man who grew up around the same time period as the show.

- Video chatted with some old familiar faces and these social interactions were actually the best parts of my day. I need to do this more: reach out to people and just play catch up

- Packed packed packed and sorted out administrative stuff like printing out statements proving the transaction from Morgan Stanley to Wells Fargo and obtaining home insurance and reading through contracts for the new house that we’ll be moving into in less than 2 weeks

Mental and Physical Health

- Attended my weekly therapy session and was comforted when my therapist shared that he was like me in the sense that we often take on work that just needs to get done, and this similarity between the two of us may explain why tensions built up between the two of us the previous week

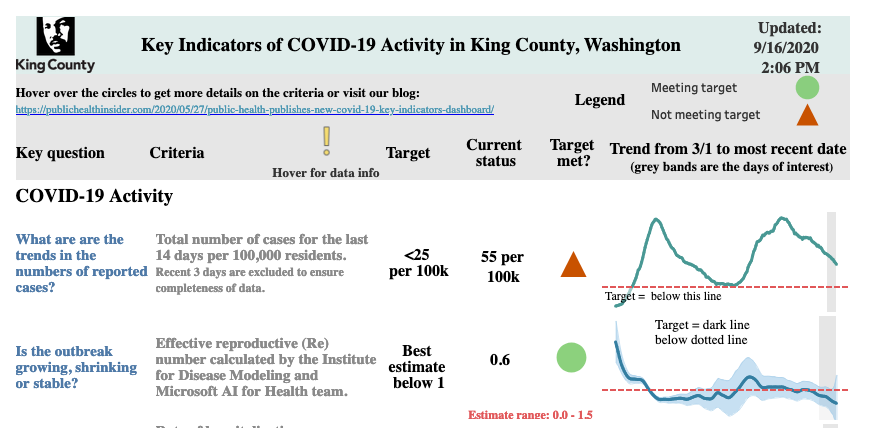

- Did not really exercise at all because of the wildfire smoke blanketing the Pacific Northwest. Luckily, the weather cleared up yesterday so I will take advantage of the fresh air

- Got a haircut. Originally, I categorized getting a haircut underneath the miscellaneous section but upon reflection, I find that grooming ourselves and taking care of our physical appearance (not out of vanity) actually positively impacts our mental health (or negatively if we don’t take care of ourselves). It really is easy to let oneself go, especially during the pandemic.

Graduate School

- Submitted Project 1 on virtual CPU scheduler and memory coordinator

- Learned a ton from watching lectures on mutual exclusion and barrier synchronization (see the Things I learned section above)

- Finished watching most of the lecture series on parallel systems but still need to finish Scheduling and Shared Memory Multiprocessor OS

Music

- Guitar lesson with Jared last Sunday. Focused on introducing inversions as a way to spice up my songwriting.