In this blog post, you’ll learn how to deploy a Python-based worker using Digital Ocean’s App Platform for only $5.00 per month, all in less than 5 minutes.

Deploying a long running process

Imagine you’re designing a distributed system and, as part of that architecture, you have a Linux process that needs to run indefinitely. The process constantly checks a queue (e.g. RabbitMQ, Amazon SQS) and, upon receiving a message, either sends an email notification or performs data aggregation. Regardless of the exact work that needs to be carried out, the process needs to be always on, always running.
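To make that shape concrete, here is a minimal sketch of such a worker. It is not the code from the example repository: the standard library's `queue.Queue` stands in for RabbitMQ or SQS, and `handle_message`, `run_worker`, and the message fields (`type`, `to`, `rows`) are names I made up for illustration.

```python
import queue


def handle_message(message: dict) -> str:
    """Dispatch one message to the matching kind of work (both are placeholders)."""
    if message.get("type") == "email":
        return f"sent email to {message['to']}"
    return f"aggregated {len(message.get('rows', []))} rows"


def run_worker(inbox: queue.Queue, poll_timeout: float = 1.0, max_idle_polls=None) -> int:
    """Poll the queue and handle messages as they arrive.

    Runs forever when max_idle_polls is None (the "always on" case). Otherwise
    it stops after that many consecutive empty polls, which is handy for tests.
    Returns the number of messages handled.
    """
    handled = 0
    idle_polls = 0
    while max_idle_polls is None or idle_polls < max_idle_polls:
        try:
            message = inbox.get(timeout=poll_timeout)
        except queue.Empty:
            idle_polls += 1
            continue
        idle_polls = 0
        print(handle_message(message))
        handled += 1
    return handled
```

In production you would call `run_worker(inbox)` with no idle limit, so the loop never returns on its own.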

Alternative deployment options

A long-running process can be deployed in a variety of ways, each with its own trade-offs. Sure, you can launch an AWS EC2 instance and deploy your program like any other Linux process, but that requires additional scripting to stop, start, and restart the process. In addition, you need to maintain and monitor the server, not to mention the likely overprovisioning of compute and memory resources.

Another option is to modify the program so that it’s short-lived: the process starts, performs some body of work, then exits. With that change, you can deploy the program to AWS Lambda and configure it to invoke the job at fixed intervals (e.g. every minute, every five minutes). The change is necessary because Lambda is designed to run short-lived jobs and has a maximum runtime of 15 minutes.
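As a rough sketch of what the short-lived variant could look like, the handler below drains a backlog until it is empty or a time budget runs out, then returns. The `handler(event, context)` signature is the one Lambda uses for Python functions. Everything else is an assumption for illustration: `BACKLOG` is an in-memory stand-in for a real queue, and `TIME_BUDGET_IN_SECONDS` is an arbitrary value.

```python
import time
from collections import deque

# Hypothetical in-memory backlog standing in for RabbitMQ or SQS.
BACKLOG = deque(["msg-1", "msg-2", "msg-3"])

# Assumed budget, chosen to stay well under Lambda's 15-minute maximum.
TIME_BUDGET_IN_SECONDS = 10.0


def handler(event, context):
    """Process queued messages until the backlog is empty or the budget runs out."""
    deadline = time.monotonic() + TIME_BUDGET_IN_SECONDS
    processed = []
    while BACKLOG and time.monotonic() < deadline:
        processed.append(BACKLOG.popleft())
    # Anything left over waits for the next scheduled invocation.
    return {"processed": processed, "remaining": len(BACKLOG)}
```

The trade-off is latency: a message that arrives just after one invocation finishes waits until the next scheduled run.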

Or, as covered in this post, you can deploy a long-running process on Digital Ocean using their App Platform.

Code sample

Below is a snippet of code. I removed most of the boilerplate and kept only the relevant section: the while loop that performs the body of work. The full source code for this example is in the example-github GitHub repository.

    # logger and the *_IN_SECONDS constants are defined in the omitted boilerplate.
    while proc_runtime_in_secs < MAX_PROC_RUNTIME_IN_SECONDS:
        logger.info("Proc running for %d seconds", proc_runtime_in_secs)
        start = time.monotonic()

        logger.info("Doing some work")
        work_for = random.randint(MIN_SLEEP_TIME_IN_SECONDS,
                                  MAX_SLEEP_TIME_IN_SECONDS)

        # Simulate work by busy-waiting until work_for seconds have passed.
        elapsed_worker_loop_time_start = time.monotonic()
        elapsed_worker_loop_time_end = time.monotonic()
        while (elapsed_worker_loop_time_end - elapsed_worker_loop_time_start) < work_for:
            elapsed_worker_loop_time_end = time.monotonic()

        logger.info("Done working for %d", work_for)
        end = time.monotonic()
        proc_runtime_in_secs += end - start

If you are curious about why I’m periodically exiting the program after a certain amount of time, it’s a way to increase robustness. I’ll cover this concept in more detail in a separate post, but for now, check out the bonus section at the bottom of this post.

Testing out this program locally

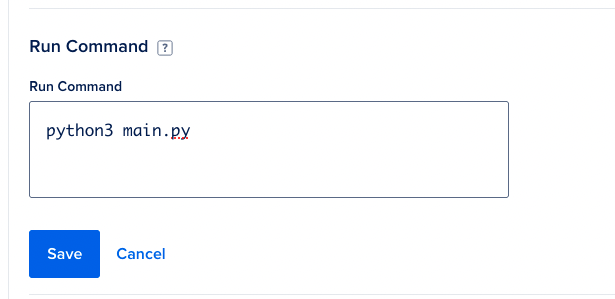

With the code checked out locally, you can launch the above program with the following command: python3 main.py.

Setting up Buildpack

Digital Ocean needs to detect your build and runtime environment. Detection is made possible with buildpacks. For Python-based applications, Digital Ocean scans the repository, searching for one of these three files:

- requirements.txt

- Pipfile

- setup.py

In our example code repository, I’ve defined a requirements.txt (empty, since the example declares no dependencies) to ensure that Digital Ocean detects our repository as a Python-based application.
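If you want to check your repository before pushing, a small script can mimic that detection. This is only a sketch of the idea, not Digital Ocean’s actual buildpack logic, and the function names are hypothetical. It simply reports which of the three marker files exist at the top level of a directory.

```python
import os

# The three files listed above.
PYTHON_MARKER_FILES = ("requirements.txt", "Pipfile", "setup.py")


def markers_in(filenames) -> list:
    """Return the marker files present in an iterable of file names."""
    present = set(filenames)
    return [name for name in PYTHON_MARKER_FILES if name in present]


def find_python_markers(repo_root: str = ".") -> list:
    """Return the marker files found at the top level of repo_root."""
    return markers_in(os.listdir(repo_root))
```

An empty list from `find_python_markers()` means none of the three files is present, so the repository would likely not be detected as a Python application.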

Bonus Tip: Pinning the runtime

While not strictly necessary, pinning your Python runtime version is a best practice. If you are developing locally with Python 3.9.13, then the remote environment should run the same version. Version matching saves you future headaches: a mismatch between your local Python runtime and Digital Ocean’s Python runtime can cause unnecessary and avoidable debugging sessions.
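One way to catch a mismatch early is to compare the running interpreter against the pinned version, for example at startup or in a test. The helper names below are hypothetical, and the sketch assumes the pin is a single line in the `python-X.Y.Z` form.

```python
import sys


def parse_pinned_version(runtime_txt: str) -> tuple:
    """Turn a line like 'python-3.9.13' into (3, 9, 13)."""
    name, _, version = runtime_txt.strip().partition("-")
    if name != "python" or not version:
        raise ValueError(f"unexpected pinned runtime: {runtime_txt!r}")
    return tuple(int(part) for part in version.split("."))


def matches_running_interpreter(runtime_txt: str) -> bool:
    """True when the pinned version equals the interpreter running this code."""
    return parse_pinned_version(runtime_txt) == tuple(sys.version_info[:3])
```

Locally, you could read the pin file, pass its contents to `matches_running_interpreter`, and log a warning when it returns `False`.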

runtime.txt

    python-3.9.13

Step by Step – Deploying your worker

Follow the steps below to deploy your Python GitHub repository as a Digital Ocean worker.

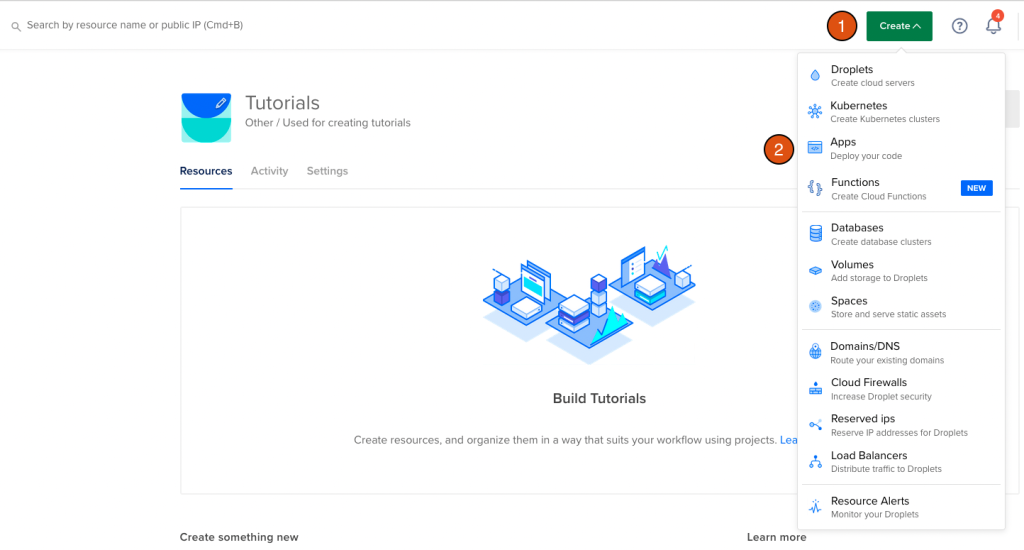

1. Creating a Digital Ocean “App”

Log in to your Digital Ocean account and, in the top right corner, click “Create” and then select “Apps”.

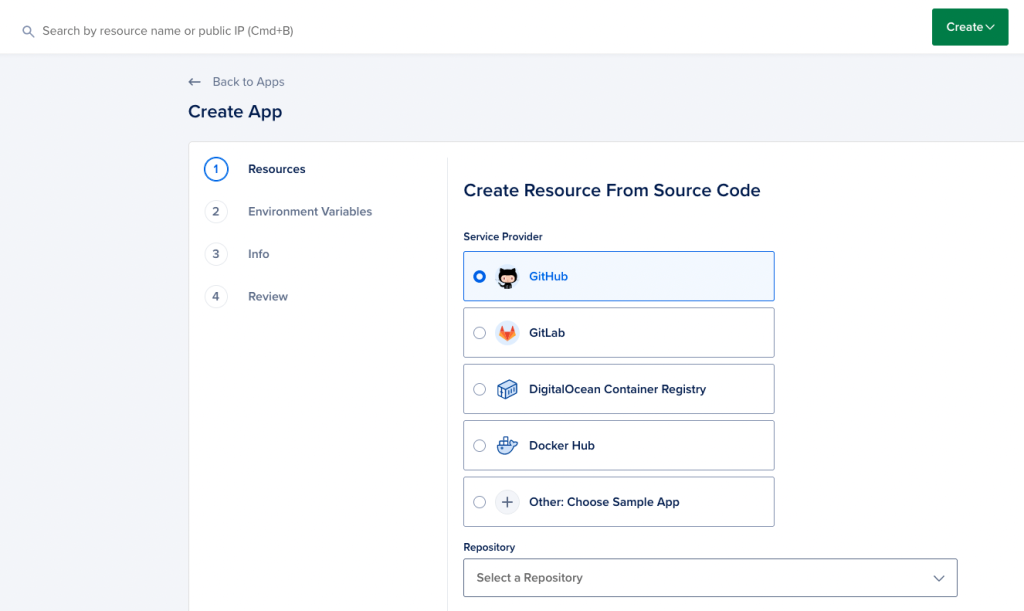

2. Configuring the resource

Select your “Service Provider”. In this example, I’m using GitHub, where this repository is hosted.

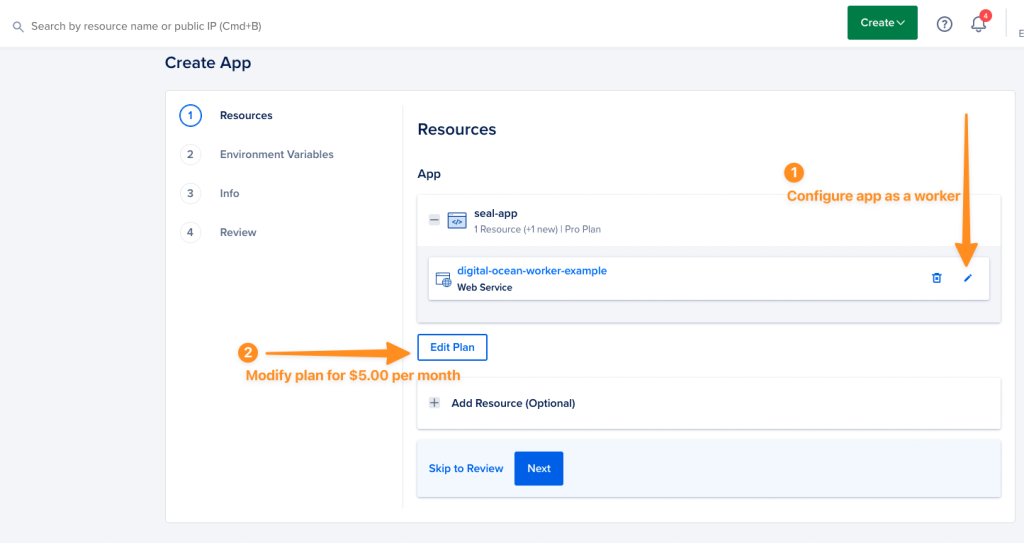

Then, you need to configure the application as a worker and edit the plan from the default price of $15.00 per month.

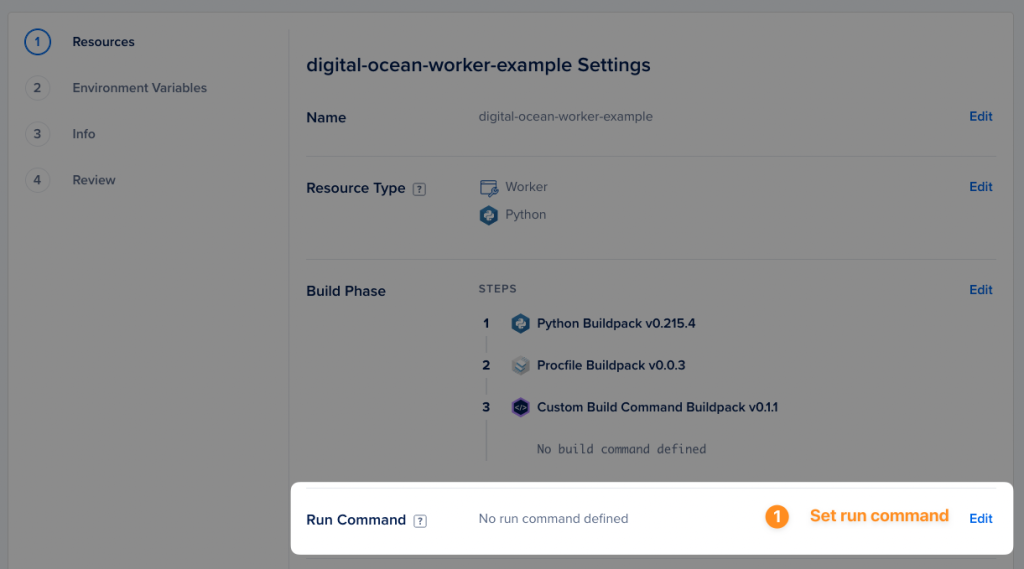

2a – Configure app as a worker

By default, Digital Ocean assumes that you are building a web service. In this case, we are deploying a worker, so select “worker” from the drop-down menu.

2b – Edit the plan

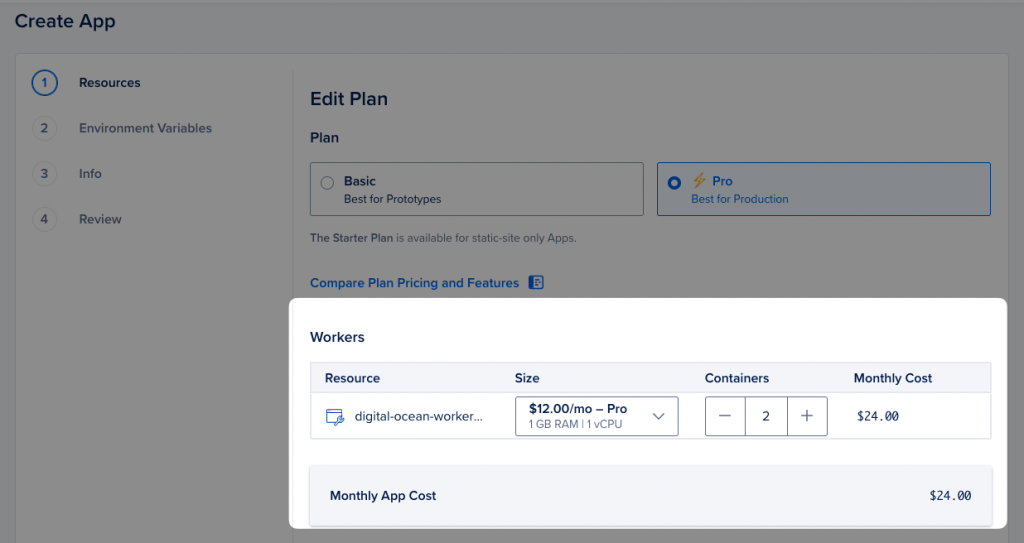

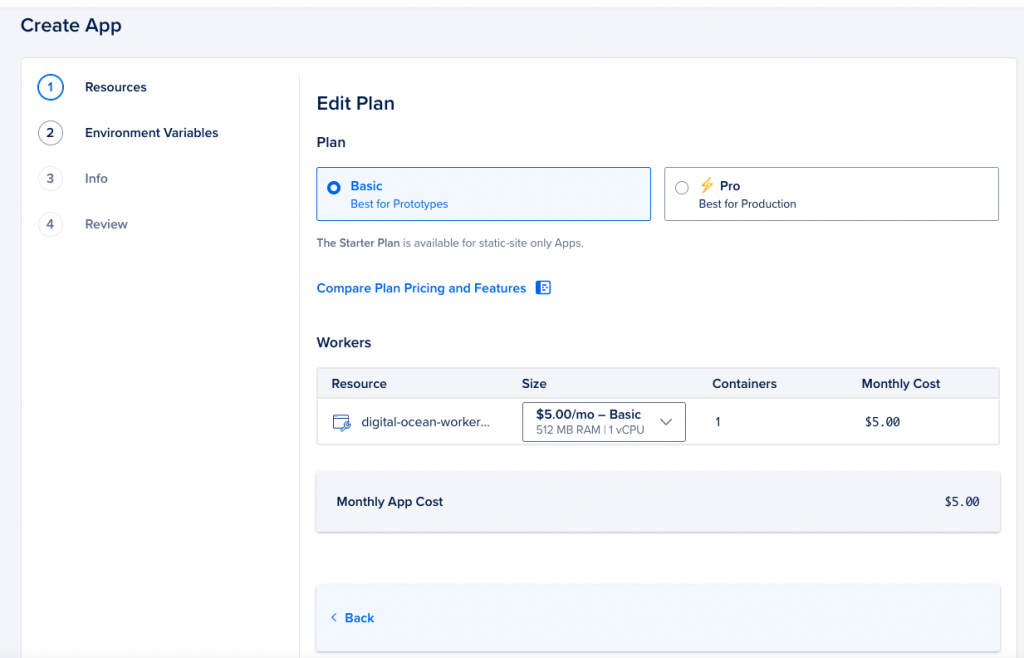

By default, Digital Ocean chooses a worker with 1 GB RAM and 1 vCPU, costing $24.00 per month. In this example, we do NOT need that entire memory footprint and can get away with half the memory. So let’s choose 512 MB RAM, dropping the cost down to $5.00 per month.

Select the “Basic Plan” radio button and adjust the resource size from 1 GB RAM to 512 MB RAM.

Configure the run command

Although we provided other files (e.g. requirements.txt) so that Digital Ocean detects the application as a Python program, we still need to specify which command will actually run.

You’re done!

That’s it! Select your datacenters (e.g. New York, San Francisco) and then hit that save button.

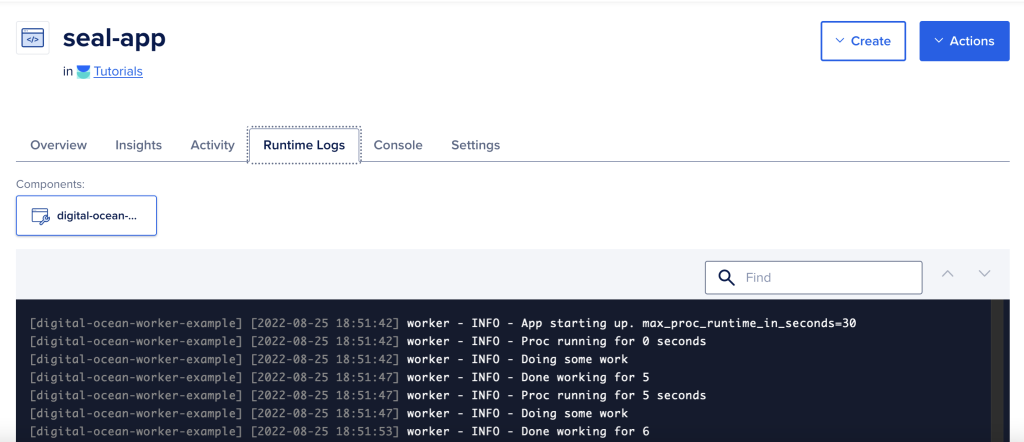

The application will now be deployed and within a few minutes, you’ll be able to monitor the application by reviewing the Runtime logs.

Monitoring the runtime logs

In our sample application, we are writing to standard output and standard error. Because we write to these file handles, Digital Ocean captures the messages and logs them for you, including a timestamp. This is useful for debugging, troubleshooting errors, or finding out why your application crashed.
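For reference, here is one way to point Python’s standard `logging` module at standard output. This is a sketch and may differ from the boilerplate in the example repository. The `build_logger` helper and the format string are my own choices, and I left the timestamp out of the format since the platform adds one.

```python
import logging
import sys


def build_logger(name: str = "worker") -> logging.Logger:
    """Return a logger that writes INFO and above to standard output."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid stacking duplicate handlers on repeated calls
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))
        logger.addHandler(handler)
    return logger


logger = build_logger()
logger.info("Proc running for %d seconds", 0)
```

Note that `logging.StreamHandler()` with no argument writes to standard error, which the paragraph above says is captured as well.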

Bonus: Automatic restart of your process

If your worker crashes, Digital Ocean notices and automatically restarts the process. That means there is no need for a control process that forks your worker and monitors its PID.

This post is aimed at self-taught developers who want to deploy their application cost-effectively, and at CTOs who are trying to minimize the cost of running a long-running process.

Summary

In this post, we took a long-running Python worker process and deployed it on Digital Ocean for $5.00 per month!

References

- https://docs.aws.amazon.com/whitepapers/latest/how-aws-pricing-works/aws-lambda.html

- https://docs.digitalocean.com/products/app-platform/reference/buildpacks/python/